Decadal Survey of Biological Physics/Physics of Living Systems. A report by the National Academies.

If you absolutely don’t have time to read the entire 315-page report, at least look at the 2-page Executive Summary. It begins with this delightful sentence:

“Biological physics, or the physics of living systems, brings the physicist’s style of inquiry to bear on the beautiful phenomena of life.”

Russ Hobbie and I try to capture the “physicist’s style” in IPMB, using toy models, quantitative analysis, and connections to simple physics principles. When I taught biology students in my Biological Physics and Medical Physics classes at Oakland University, one of the greatest challenges was getting these students comfortable with the physics style.

The Introduction and Overview of the report emphasizes how biological physicists “turn qualitative impressions into quantitative measurements, taming the complexity and organizing the diversity of life.” I think that “taming the complexity” lies at the heart of what physicists offer to biology. I know some biologists think we go too far in our quest to simplify, but I view this effort as essential to understanding the unity of life.

Part I explores four “big questions” facing biological physics:

1. What physics problems do organisms need to solve?

2. How do living systems represent and process information?

3. How do macroscopic functions of life emerge from interactions among many microscopic constituents?

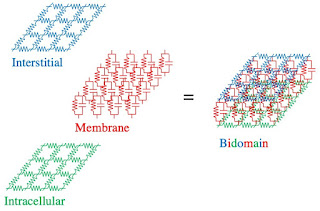

4. How do living systems navigate parameter space?

The view that organisms need to solve physics problems is extraordinarily useful. Perhaps we don’t focus enough on information, but often consideration of information takes you into discussions of the genome, a topic that neither Russ nor I had any expertise in (although we do cover bioelectricity in detail, which is how organisms transmit information through the nervous system). Emergent behavior is another area IPMB doesn’t explore in great detail, but we do show how a simple cellular automaton model provides insight into complex heart arrhythmias. At first I wasn’t sure what “navigating parameter space” meant, but then I thought of the mathematical models describing cardiac membrane behavior, with their dozens of parameters, and I realized how crucial it is to characterize such a complex system. I was particularly struck by how the report related “navigating parameter space” to evolution. One can think of evolution as a way to optimize the values of these many parameters in order to produce the desired emergent behaviors.

Part II explores connections between the physics of living systems and other fields of physics; biology and chemistry; and health, medicine, and technology. Russ and I focus on the connection to health and medicine, which means IPMB bridges the boundary between biological physics and medical physics. I believe one of the strengths of our book, and one of the strengths of biological physics as a discipline, is the integrated view it provides of many diverse scientific fields. I was particularly fascinated by the report’s discussion of how “results and methods from the biological physics community have been central in the world’s response to the COVID-19 pandemic.” The report concludes that “biological physics now has emerged fully as a field of physics, alongside more traditional fields of astrophysics and cosmology, atomic, molecular and optical physics, condensed matter physics, nuclear physics, particle physics, and plasma physics.”

Part III addresses challenges that the physics of living systems faces in the future. The one most relevant to IPMB is educating the next generation of biological physicists. The report states:

“Although teaching physics of course involves teaching particular things, there is a unique physics culture at the core of our teaching. This culture emphasizes general principles, and the use of these principles to predict the behavior of specific systems; the importance of numerical facts about the world, and how these facts are related to one another through the general principles; the value of idealization and simplification, sometimes even to the point of over-simplification; and the deep connections between distant subfields of physics. It is vital that this unifying culture is transmitted to students in biological physics.”

That paragraph summarizes what Russ and I have tried to do in our book: develop a culture that emphasizes general principles. I don’t know how well we have succeeded, but it’s a crucial goal of Intermediate Physics for Medicine and Biology.

I can only begin to scratch the surface of what is in this Decadal Survey. Congratulations to the committee, and in particular the chair William Bialek, for this effort. I’ll end with the lovely last sentence of the Executive Summary:

“Ultimately, a mature physics of life will change our view of ourselves as humans.”