| Intermediate Physics for Medicine and Biology. |

This week I spent three days in Las Vegas. I know you’ll be shocked...shocked!...to hear there is gambling going on in Vegas. If you want to improve your odds of winning, you need to understand probability.

Russ Hobbie and I discuss probability in Intermediate Physics for Medicine and Biology. The most engaging way to introduce the subject is through analyzing games of chance. I like to choose a game that is complicated enough to be interesting, but simple enough to explain in one class. A particularly useful game for teaching probability is craps.

The rules: Throw two dice. If you roll a seven or eleven you win. If you roll a two, three, or twelve you lose. If you roll anything else you keep rolling until you either “make your point” (get the same number that you originally rolled) and win, or “crap out” (roll a seven) and lose.

Two laws are critical for any probability calculation.

- For independent events, the probability of both event A and event B occurring is the product of the individual probabilities: P(A and B) = P(A) P(B).

- For mutually exclusive events, the probability of either event A or event B occurring is the sum of the individual probabilities: P(A or B) = P(A) + P(B).

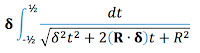

|

| Snake Eyes. |

For instance, if you roll a single die, the probability of getting a one is 1/6. If you roll two dice (independent events), the probability of getting a one on the first die and a one on the second (snake eyes) is (1/6) (1/6) = 1/36. If you roll just one die, the probability of getting either a one or a two (mutually exclusive events) is 1/6 + 1/6 = 1/3. Sometimes these laws operate together. For instance, what are the odds of rolling a seven with two dice? There are six ways to do it: roll a one on the first die and a six on the second die (1,6), or (2,5), or (3,4), or (4,3), or (5,2), or (6,1). Each way has a probability of 1/36 (the two dice are independent) and the six ways are mutually exclusive, so the probability of a seven is 1/36 + 1/36 + 1/36 + 1/36 + 1/36 + 1/36 = 6/36 = 1/6.
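If you would rather let a computer do the counting, here is a minimal Python sketch of the same calculation. It assumes two fair six-sided dice; the function name `sum_probabilities` is my own invention for illustration. Each of the 36 ordered pairs gets probability 1/36 (the product rule), and pairs with the same total are added together (the sum rule).

```python
from fractions import Fraction
from itertools import product

def sum_probabilities():
    """Return {total: probability} for the sum of two fair six-sided dice."""
    probs = {}
    # Each ordered pair (d1, d2) is one of 36 equally likely, mutually
    # exclusive outcomes, so each contributes (1/6)(1/6) = 1/36.
    for d1, d2 in product(range(1, 7), repeat=2):
        total = d1 + d2
        probs[total] = probs.get(total, Fraction(0)) + Fraction(1, 36)
    return probs

probs = sum_probabilities()
print("P(snake eyes) =", probs[2])   # 1/36
print("P(seven)      =", probs[7])   # 1/6
```

Using `Fraction` instead of floating point keeps the answers as exact ratios, which makes them easy to compare with the hand calculation.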

|

| Boxcars. |

Now let’s analyze craps. The probability of winning immediately is 6/36 for a seven plus 2/36 for an eleven (a five and a six, or a six and a five), for a total of 8/36 = 2/9 = 22%. The probability of losing immediately is 1/36 for a two, plus 2/36 for a three, plus 1/36 for a twelve (boxcars), for a total of 4/36 = 1/9 = 11%. The probability of continuing to roll is...we could work it out, but the sum of the probabilities must equal 1 so a shortcut is to just calculate 1 – 2/9 – 1/9 = 6/9 = 2/3 = 67%.
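The first-roll numbers can be checked with a short Python sketch. It assumes fair dice, and the names `WAYS` and `come_out_probabilities` are hypothetical helpers of my own. It also works out the "keep rolling" probability the long way, by counting the ways to roll a 4, 5, 6, 8, 9, or 10, to confirm the shortcut.

```python
from fractions import Fraction

# Number of ways (out of 36) to roll each total with two fair dice:
# 1 way for a 2, rising to 6 ways for a 7, then falling to 1 way for a 12.
WAYS = {total: 6 - abs(total - 7) for total in range(2, 13)}

def come_out_probabilities():
    """Return (win, lose, keep_rolling) probabilities for the first roll."""
    win = Fraction(WAYS[7] + WAYS[11], 36)              # seven or eleven
    lose = Fraction(WAYS[2] + WAYS[3] + WAYS[12], 36)   # two, three, or twelve
    keep_rolling = 1 - win - lose                       # the shortcut
    return win, lose, keep_rolling

win, lose, keep_rolling = come_out_probabilities()
print(win, lose, keep_rolling)   # 2/9 1/9 2/3

# The long way: add up the ways to roll each possible point.
direct = Fraction(sum(WAYS[p] for p in (4, 5, 6, 8, 9, 10)), 36)
print(direct == keep_rolling)    # True
```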

The case when you continue rolling gets interesting. For each additional roll, you have three possibilities:

- Make your point and win with probability a,

- Crap out and lose with probability b, or

- Roll again with probability c.

What is the probability that, if you keep rolling, you make your point before crapping out? You could make your point on the first additional roll with probability a; you could roll once and then roll again and make your point on the second additional roll with probability ca; you could have three additional rolls and make your point on the third one with probability cca, etc. The total probability of making your point is a + ca + cca + … = a (1 + c + c² + …). The quantity in parentheses is the geometric series, and can be evaluated in closed form: 1 + c + c² + … = 1/(1 - c). The probability of making your point is therefore a/(1 - c). We know that one of the three outcomes must occur, so a + b + c = 1, which means 1 - c = a + b, and the probability of making your point can be expressed equivalently as a/(a + b).

If your original roll was a four, then a = 3/36. The chance of getting a seven is b = 6/36. So, a/(a + b) = 3/9 = 1/3 or 33%. If your original roll was a five, then a = 4/36 and the probability of making your point is 4/10 = 40%. If your original roll was a six, then a = 5/36 and the probability of making your point is 5/11 = 45%. You can work out the probabilities for 8, 9, and 10, but you’ll find they are the same as for 6, 5, and 4.
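To see the geometric series at work, here is a rough Python sketch that computes the probability of making each point two ways: from the closed form a/(1 - c), and by adding up the first 200 terms of a + ca + cca + …. It assumes fair dice, and `point_probability` is a name I made up for this illustration. The cutoff of 200 terms is arbitrary; the terms shrink so fast that the partial sum is indistinguishable from the closed form.

```python
from fractions import Fraction

def point_probability(point, terms=200):
    """Probability of making `point` before rolling a seven.

    Returns (closed_form, partial_sum): the exact value a/(1 - c) and a
    floating-point partial sum of the series a + c*a + c*c*a + ...
    """
    ways = 6 - abs(point - 7)      # ways to roll `point` with two dice
    a = Fraction(ways, 36)         # make your point
    b = Fraction(6, 36)            # crap out (roll a seven)
    c = 1 - a - b                  # neither, so roll again
    closed_form = a / (1 - c)      # same as a / (a + b)
    partial_sum = sum(a * c**n for n in range(terms))
    return closed_form, float(partial_sum)

for point in (4, 5, 6, 8, 9, 10):
    exact, approx = point_probability(point)
    print(point, exact, round(approx, 6))
```

The exact values come out as 1/3, 2/5, and 5/11 for points of 4, 5, and 6, with 10, 9, and 8 matching them by symmetry.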

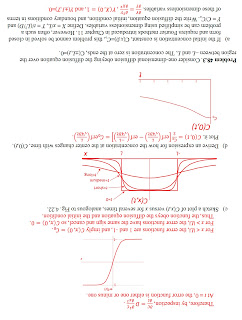

Now we have all we need to determine the probability of winning at craps. We have a 2/9 chance of rolling a seven or eleven immediately, plus a 3/36 chance of rolling a four originally followed by the odds of making your point of 1/3, plus…I will just show it as an equation.

P(winning) = 2/9 + 2 [ (3/36) (1/3) + (4/36) (4/10) + (5/36) (5/11) ] = 49.3%.

The probability of losing would be difficult to work out from first principles, but we can take the easy route and calculate P(losing) = 1 – P(winning) = 50.7%.
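The equation for P(winning) is easy to evaluate exactly with a few lines of Python. This is only a sketch under the same assumptions as the text (fair dice, the basic bet with no side bets), and `craps_win_probability` is a hypothetical name. For each point, the probability of rolling it originally (a) is multiplied by the probability of then making it, a/(a + b).

```python
from fractions import Fraction

def craps_win_probability():
    """Exact probability of winning the basic craps bet with fair dice."""
    ways = {t: 6 - abs(t - 7) for t in range(2, 13)}   # ways to roll each total
    p_win = Fraction(ways[7] + ways[11], 36)           # immediate win: 2/9
    b = Fraction(ways[7], 36)                          # crap out on any later roll
    for point in (4, 5, 6, 8, 9, 10):
        a = Fraction(ways[point], 36)
        # Roll the point originally (a), then make it before a seven (a/(a+b)).
        p_win += a * a / (a + b)
    return p_win

p_win = craps_win_probability()
print(p_win, float(p_win))           # 244/495, about 0.4929
print(1 - p_win, float(1 - p_win))   # 251/495, about 0.5071
```

As an exact fraction the chance of winning is 244/495, which rounds to the 49.3% quoted above.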

The chance of winning is almost even, but not quite. The odds are stacked slightly against you. If you play long enough, you will almost certainly lose on average. That is how casinos in Las Vegas make their money. The odds are close enough to 50-50 that players have a decent chance of coming out ahead after a few games, which makes them willing to play. But when averaged over thousands of players every day, the casino always wins.
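You can watch the averaging happen by simulating many games. The Python sketch below is a simple Monte Carlo estimate, assuming fair dice and the basic rules given earlier (no side bets). The function names, the default of 200,000 games, and the seed are arbitrary choices of mine, not anything a casino uses.

```python
import random

def play_craps(rng):
    """Play one game of craps; return True for a win, False for a loss."""
    roll = rng.randint(1, 6) + rng.randint(1, 6)
    if roll in (7, 11):
        return True                  # win immediately
    if roll in (2, 3, 12):
        return False                 # lose immediately
    point = roll
    while True:                      # keep rolling
        roll = rng.randint(1, 6) + rng.randint(1, 6)
        if roll == point:
            return True              # made your point
        if roll == 7:
            return False             # crapped out

def estimate_win_rate(games=200_000, seed=0):
    """Fraction of simulated games won; the seed makes the run repeatable."""
    rng = random.Random(seed)
    wins = sum(play_craps(rng) for _ in range(games))
    return wins / games

print(estimate_win_rate())
```

With a few dozen games the estimate bounces around and can easily land above one half, but with hundreds of thousands of games it should settle close to the calculated 49.3%.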

|

| Lady Luck, by Warren Weaver. |

I hope this analysis helps you better understand probability. Once you master the basic rules, you can calculate other quantities more relevant to biological physics, such as temperature, entropy, and the Boltzmann factor (for more, see Chapter 3 of IPMB). When I teach statistical thermodynamics or quantum mechanics, I analyze craps on the first day of class. I arrive early and kneel in a corner of the room, throwing dice against the wall. As students come in, I invite them over for a game. It's a little creepy, but by the time class begins the students know the rules and are ready to start calculating. If you want to learn more about probability (including a nice description of craps), I recommend Lady Luck by Warren Weaver.

I stayed away from the craps table in Vegas. The game is fast paced and there are complicated side bets you can make along the way that we did not consider. Instead, I opted for blackjack, where I turned $20 into $60 and then quit. I did not play the slot machines, which are random number generators with flashing lights, bells, and whistles attached. I am told they have worse odds than blackjack or craps.

The trip to Las Vegas was an adventure. My daughter Stephanie turned 30 on the trip (happy birthday!) and acted as our tour guide. We stuffed ourselves at one of the buffets, wandered about Caesars Palace, and saw the dancing fountains in front of the Bellagio. The show Tenors of Rock at Harrah's was fantastic. We did some other stuff too, but let’s not go into that (What Happens in Vegas Stays in Vegas).