I now better appreciate online access to textbooks. IPMB can be downloaded through the OU library catalog at no charge by anyone logging on as an OU student, faculty, or staff member. I have access to other books online, and the number seems to be increasing every year.

Radiation Oncology: A Physicist's Eye View, by Michael Goitein.

Goitein M (2008) Radiation Oncology: A Physicist’s Eye View. Springer, New York.

It’s an excellent book, and you may hear more about it from me in the coming weeks. The preface begins:

This book describes how radiation is used in the treatment of cancer. It is written from a physicist’s perspective, describing the physical basis for radiation therapy, and does not address the medical rationale or clinical aspects of such treatments. Although the physics of radiation therapy is a technical subject, I have used, to the extent possible, non-technical language. My intention is to give my readers an overview of the broad issues and to whet their appetite for more detailed information, such as is available in textbooks.

“Whetting their appetite” is also a goal of IPMB, and of this blog. In fact, education in general is a process of whetting readers’ appetites so they will go on to learn more on their own.

I particularly like Michael Goitein’s chapter on uncertainty. Its lessons apply far beyond radiation oncology, and they are never more relevant than during a pandemic. Enjoy!

UNCERTAINTY MUST BE MADE EXPLICIT

ISO [International Organization for Standardization] (1995) states that “the result of a measurement [or calculation] … is complete only when accompanied by a statement of uncertainty.” Put more strongly, a measured or computed value which is not accompanied by an uncertainty estimate is meaningless. One simply does not know what to make of it. For reasons which I do not understand, and vehemently disapprove of, the statement of uncertainty in the clinical setting is very often absent. And, when one is given, it is usually unaccompanied by the qualifying information as to the confidence associated with the stated uncertainty interval—which largely invalidates the statement of uncertainty.

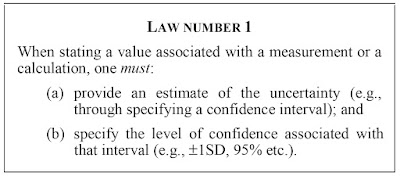

The importance of first estimating and then providing an estimate of uncertainty has led me to promulgate the following law:

[Goitein's First Law of Uncertainty.]

There is simply no excuse for violating either part of Law number 1. The uncertainty estimate may be generic, based on past experience with similar problems; it may be a rough “back-of-the-envelope” calculation; or it may be the result of a detailed analysis of the particular measurement. Sometimes it will be sufficient to provide an umbrella statement such as “all doses have an associated confidence interval of ±2% (SD) unless otherwise noted.” In any event, the uncertainty estimate should never be implicit; it should be stated.
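To see why a stated uncertainty means little without its confidence level, here is a minimal Python sketch (my illustration, not from Goitein's book). It assumes the error is normally distributed; the function names `coverage_interval` and `report` and the 2.00 Gy dose are hypothetical. The same ±2% standard deviation produces intervals of different widths depending on the confidence attached to them.

```python
from statistics import NormalDist


def coverage_interval(value: float, sd: float, confidence: float) -> tuple[float, float]:
    """Two-sided interval around `value`, ASSUMING a normal error distribution."""
    # z is the number of standard deviations enclosing `confidence` of a normal distribution
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return value - z * sd, value + z * sd


def report(value: float, rel_sd: float, confidence: float, unit: str = "Gy") -> str:
    """Format a value with its interval AND the confidence level the interval refers to."""
    sd = value * rel_sd  # convert a relative SD (e.g. 0.02 for 2%) to absolute units
    lo, hi = coverage_interval(value, sd, confidence)
    return f"{value:.2f} {unit} [{lo:.2f}, {hi:.2f}] {unit} at {confidence:.0%} confidence"


if __name__ == "__main__":
    # Hypothetical 2.00 Gy dose with the umbrella uncertainty of ±2% (1 SD)
    for c in (0.6827, 0.95):
        print(report(2.00, 0.02, c))
```

With a ±2% (1 SD) uncertainty, the 68% interval on a 2.00 Gy dose is about ±0.04 Gy, while the 95% interval is about ±0.08 Gy. A bare "±" without a confidence level leaves the reader guessing which one is meant.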

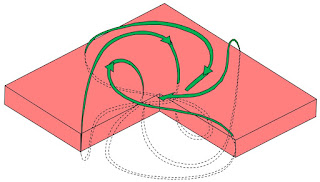

In graphical displays such as that of a dose distribution in a two-dimensional plane, the display of uncertainty can be quite challenging. This is for two reasons. First, it imposes an additional dimension of information which must somehow be graphically presented. And second because, in the case, for example, of the value of the dose at a point, the uncertainty may be expressed as either a numerical uncertainty in the dose value, or as a positional uncertainty in terms of the distance of closest approach. One approach to the display of dose uncertainty is shown in Figure 6.4 of Chapter 6.

HOW TO DEAL WITH UNCERTAINTY

To act in the face of uncertainty is to accept risk. Of course, deciding not to act is also an action, and equally involves risk. One’s decision as to what action to take, or not to take, should be based on the probability of a given consequence of the action and the importance of that consequence. In medical practice, it is particularly important that the importance assigned to a particular consequence is that of the patient, and not his or her physician. I know a clinician who makes major changes in his therapeutic strategy because of what I consider to be a trivial cosmetic problem. Of course, some patients might not find it trivial at all. So, since he assumes that all patients share his concern, I judge that he does not reflect the individual patient’s opinion very well. Parenthetically, it is impressive how illogically most of us perform our risk analyses, accepting substantial risks such as driving to the airport while refusing other, much smaller ones, such as flying to Paris (Wilson and Crouch, 2001). (I hasten to add that I speak here of the risk of flying, not that of being in Paris.)
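One common way to formalize basing a decision on both the probability of each consequence and its importance is to compare expected harm: the sum of probability times importance over each action's consequences. The sketch below is my illustration, not Goitein's method; the actions, probabilities, and importance weights are hypothetical, and the weights are meant to be the patient's, not the physician's.

```python
# (probability of a consequence, importance of that consequence to the patient)
Outcome = tuple[float, float]


def expected_harm(outcomes: list[Outcome]) -> float:
    """Sum of probability x importance over an action's possible consequences."""
    return sum(p * weight for p, weight in outcomes)


def best_action(actions: dict[str, list[Outcome]]) -> str:
    """Pick the action with the smallest expected harm; 'do not treat' counts as an action too."""
    return min(actions, key=lambda name: expected_harm(actions[name]))


if __name__ == "__main__":
    # Hypothetical numbers for illustration only
    actions = {
        "treat": [(0.10, 5.0), (0.30, 1.0)],  # serious complication, cosmetic change
        "do not treat": [(0.40, 5.0)],        # serious outcome of untreated disease
    }
    print(best_action(actions))
    # Same probabilities, but this patient weighs the cosmetic change ten times more heavily
    actions["treat"][1] = (0.30, 10.0)
    print(best_action(actions))
```

Changing only the importance weight the patient assigns to one consequence flips the decision, which is why those weights should reflect the individual patient rather than the clinician.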

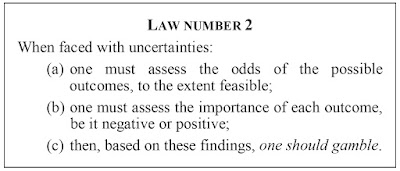

People are often puzzled as to how to proceed once they have analyzed and appreciated the full range of factors which make a given value uncertain. How should one act in the face of the uncertainty? Luckily there is a simple answer to this conundrum, which is tantamount to a tautology. Even though it may be uncertain, the value that you should use for some quantity as a basis for action is your best estimate of that quantity. It’s as simple as that. You should plunge ahead, using the measured or estimated value as though it were the “truth”. There is no more correct approach; one has to act in accordance with the probabilities. To reinforce this point, here is my second law:
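The advice to act on the best estimate pairs naturally with the first law: state the estimate together with its uncertainty, then use the estimate itself. Here is a minimal sketch (my illustration, not from the book), assuming independent repeated measurements with random errors, so that the sample mean serves as the best estimate; `best_estimate` and the readings are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def best_estimate(measurements: list[float]) -> tuple[float, float]:
    """Return (sample mean, standard error of the mean); needs at least two values."""
    if len(measurements) < 2:
        raise ValueError("need at least two measurements to estimate uncertainty")
    m = mean(measurements)
    sem = stdev(measurements) / sqrt(len(measurements))
    return m, sem


if __name__ == "__main__":
    # Hypothetical repeated readings of the same quantity
    readings = [1.98, 2.03, 2.01, 1.99, 2.00]
    value, se = best_estimate(readings)
    # State the uncertainty, then act on `value` as though it were the "truth"
    print(f"best estimate {value:.3f} ± {se:.3f} (1 standard error)")
```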

[Goitein's Second Law of Uncertainty.]

It may seem irresponsible to promote gambling when there are life-or-death matters for a patient at stake; the word has bad connotations. But in life, since almost everything is uncertain, we in fact gamble all the time. We assess probabilities, take into account the risks, and then act. We have no choice. We could not walk through a doorway if it were otherwise. And that is what we must do in the clinic, too. We cannot be immobilized by uncertainty. We must accept its inevitability and make the best judgment we can, given the state [of] our knowledge.