Selected Papers of Great American Physicists.

The charge of an electron is encountered often in Intermediate Physics for Medicine and Biology. It’s one of those constants that’s so fundamental to both physics and biology that it’s worth knowing how it was first measured. Below is a new homework problem requiring the student to analyze data like that obtained by Millikan. I have designated it for Chapter 6, right after the analysis of the force on a charge in an electric field and the relationship between the electric field and the voltage. I like this problem because it reinforces several concepts already discussed in IPMB (Stokes’ law, density, viscosity, electrostatics), it forces the student to work with realistic experimental data, and it provides a mini history lesson.

Section 6.4

Problem 11 ½. Assume you can measure the time required for a small, charged oil drop to move through air (perhaps by watching it through a microscope with a stopwatch in your hand). First, record the time for the drop to fall under the force of gravity. Then record the time for the drop to rise in an electric field. The drop will occasionally gain or lose a few electrons. Assume the drop’s charge is constant over a few minutes, but varies over the hour or two needed to perform the entire experiment, which consists of turning the electric field on and off so the same drop goes up and down repeatedly.

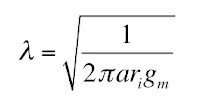

(a) When the drop falls with a constant velocity v1 it is acted on by two forces: gravity and friction given by Stokes’ law. When the drop rises at a constant velocity v2 it is acted on by three forces: gravity, friction, and an electrical force. Derive an expression for the charge q on your drop in terms of v1 and v2. Assume you know the density of the oil ρ, the viscosity of air η, the acceleration of gravity g, and the voltage V you apply across two plates separated by distance L to produce the electric field. These drops, however, are so tiny that you cannot measure their radius a. Therefore, your expression for q should depend on v1, v2, ρ, η, g, V, and L, but not a.
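One way to set up part (a) is sketched below. It assumes Stokes drag of magnitude 6πηav on a spherical drop, a uniform field V/L between the plates, and no buoyancy correction; q denotes the magnitude of the charge. Treat it as a sketch under those assumptions rather than the only route to the answer.

```latex
\begin{align}
% Falling, field off: weight balances Stokes drag, which fixes the radius a
\tfrac{4}{3}\pi a^{3}\rho g &= 6\pi\eta a v_{1}
  \quad\Longrightarrow\quad a = \sqrt{\frac{9\eta v_{1}}{2\rho g}} \\
% Rising, field on: electrical force balances weight plus drag
q\,\frac{V}{L} &= \tfrac{4}{3}\pi a^{3}\rho g + 6\pi\eta a v_{2}
  = 6\pi\eta a\,(v_{1}+v_{2}) \\
% Eliminate a using the first line
q &= \frac{18\pi L}{V}\sqrt{\frac{\eta^{3} v_{1}}{2\rho g}}\;(v_{1}+v_{2})
\end{align}
```

The second line uses the first to replace the weight by 6πηav1, which is why the final expression depends only on the two measured velocities and the known constants.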

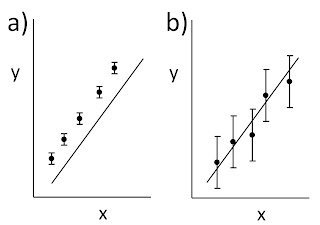

(b) You perform this experiment and find that whenever the voltage is off, the time required for the drop to fall 10 mm is always 12.32 s. Each time you turn the voltage on the drop rises, but the time to rise 10 mm varies because the number of electrons on the drop changes. Successive experiments give rise times of 162.07, 42.31, 83.33, 33.95, 18.96, and 24.33 s. Calculate the charge on the drop in each case. Assume η = 0.000018 Pa s, ρ = 920 kg m⁻³, V = 5000 V, L = 15 mm, and g = 9.8 m s⁻².

(c) Analyze your data to find the greatest common divisor for the charge on the drop, which you can take as the charge of a single electron. Hint: it may be easiest to look at changes in the drop’s charge over time.

What impressed me most about Millikan’s paper was his careful analysis of sources of systematic error. He went to great pains to determine accurately the viscosity of air, and he accounted for small effects like the mean free path of the air molecules and the drop's buoyancy (effects you can neglect in the problem above). He worried about tiny sources of error such as distortions of the shape of the drop caused by the electric field. When I was a young graduate student, Millikan’s article provided my model for how you should conduct an experiment.
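For readers who want to check their arithmetic in parts (b) and (c), here is a minimal Python sketch. It assumes the force balances described in part (a): weight against Stokes drag when falling, and electrical force against weight plus drag when rising, with buoyancy neglected. The names `drop_charge` and `approx_gcd`, and the noise threshold `TOL`, are my own illustrative choices and not part of the problem. The tolerance-based Euclidean step is just one of several ways to find an approximate common divisor of noisy data.

```python
import math
from functools import reduce

# Values quoted in part (b), converted to SI units.
ETA = 1.8e-5      # viscosity of air, Pa s
RHO = 920.0       # density of oil, kg m^-3
G = 9.8           # acceleration of gravity, m s^-2
VOLTAGE = 5000.0  # voltage across the plates, V
GAP = 0.015       # plate separation L, m
DIST = 0.010      # distance travelled in each timing, m

T_FALL = 12.32                                         # s, field off
T_RISE = [162.07, 42.31, 83.33, 33.95, 18.96, 24.33]   # s, field on


def drop_charge(t_fall, t_rise):
    """Magnitude of the drop's charge (C) from its fall and rise times."""
    v1 = DIST / t_fall   # falling speed, field off
    v2 = DIST / t_rise   # rising speed, field on
    # Falling: weight = Stokes drag, which gives the (unmeasured) radius.
    radius = math.sqrt(9 * ETA * v1 / (2 * RHO * G))
    # Rising: q V / L = weight + drag = 6 pi eta a (v1 + v2).
    return 6 * math.pi * ETA * radius * (v1 + v2) * GAP / VOLTAGE


def approx_gcd(a, b, tol):
    """Euclid's algorithm for non-negative floats; remainders below tol count as zero."""
    while b > tol:
        a, b = b, abs(a - b * round(a / b))
    return a


# Part (b): the charge for each rise time.
charges = [drop_charge(T_FALL, t) for t in T_RISE]

# Part (c): follow the hint and work with the changes in charge.
steps = [abs(q_next - q) for q, q_next in zip(charges, charges[1:])]
TOL = 2e-20  # C; assumed noise threshold, small compared with the steps
e_rough = reduce(lambda a, b: approx_gcd(a, b, TOL), steps)

# Refine: assign each charge an integer number of units, then average.
multiples = [round(q / e_rough) for q in charges]
e_est = sum(charges) / sum(multiples)

for q, n in zip(charges, multiples):
    print(f"q = {q:.3e} C, about {n} units")
print(f"estimated unit of charge = {e_est:.3e} C")
```

With the numbers in part (b), the six charges should come out close to integer multiples of a common unit near 1.6 × 10⁻¹⁹ C. The choice of `TOL` presupposes that the measurement noise is much smaller than one unit of charge, so with noisier data it would need to be revisited.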