“Earth teems with sights and textures, sounds and vibrations, smells and tastes, electric and magnetic fields. But every animal can only tap into a small fraction of reality’s fullness. Each is enclosed within its own unique sensory bubble, perceiving but a tiny sliver of our immense world.”

An Immense World, by Ed Yong.

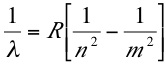

An Immense World sometimes overlaps with Intermediate Physics for Medicine and Biology. For example, both books discuss vision. Yong points out the human eye has better visual acuity than most other animals. He writes “we assume that if we can see it, they [other animals] can, and that if it’s eye-catching to us, it’s grabbing their attention… That’s not the case.” Throughout his book, Yong returns to this idea of how sensory perception differs among animals, and how misleading it can be for us to interpret animal perceptions from our own point of view.

Like IPMB, An Immense World examines color vision. Yong speculates about what a bee would think of the color red, if bees could think like humans.

Imagine what a bee might say. They are trichromats, with opsins that are most sensitive to green, blue, and ultraviolet. If bees were scientists, they might marvel at the color we know as red, which they cannot see and which they might call “ultrayellow” [I would have thought “infrayellow”]. They might assert at first that other creatures can’t see ultrayellow, and then later wonder why so many do. They might ask if it is special. They might photograph roses through ultrayellow cameras and rhapsodize about how different they look. They might wonder whether the large bipedal animals that see this color exchange secret messages through their flushed cheeks. They might eventually realize that it is just another color, special mainly in its absence from their vision.

Both An Immense World and IPMB also analyze hearing. Yong says

Human hearing typically bottoms out at around 20 Hz. Below those frequencies, sounds are known as infrasound, and they’re mostly inaudible to us unless they’re very loud. Infrasounds can travel over incredibly long distances, especially in water. Knowing that fin whales also produce infrasound, [scientist Roger] Payne calculated, to his shock, that their calls could conceivably travel for 13,000 miles. No ocean is that wide.…

Like infrasound, the term ultrasound… refers to sound waves with frequencies higher than 20 kHz, which marks the upper limit of the average human ear. It seems special—ultra, even—because we can’t hear it. But the vast majority of mammals actually hear very well into that range, and it’s likely that the ancestors of our group did, too. Even our closest relatives, chimpanzees, can hear close to 30 kHz. A dog can hear 45 kHz; a cat, 85 kHz; a mouse, 100 kHz; and a bottlenose dolphin, 150 kHz. For all of these creatures, ultrasound is just sound.

In IPMB, Russ Hobbie and I introduce the decibel scale for measuring sound intensity, or how loud a sound is. Yong uses this concept when discussing bats.

The sonar call of the big brown bat can leave its mouth at 138 decibels—roughly as loud as a siren or jet engine. Even the so-called whispering bats, which are meant to be quiet, will emit 110-decibel shrieks, comparable to chainsaws and leaf blowers. These are among the loudest sounds of any land animal, and it’s a huge mercy that they’re too high-pitched for us to hear.
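To get a feel for what the gap between 138 and 110 decibels means, here is a minimal Python sketch of the decibel relation, level = 10 log10(I/I0). It assumes the quoted figures can be treated as intensity levels relative to the conventional reference I0 = 10^-12 W/m^2; the book does not say how or at what distance the calls were measured, so the absolute intensities are only illustrative. The function and variable names are my own.

```python
import math

# Assumed reference intensity (W/m^2): the conventional threshold of human hearing.
I_REF = 1e-12


def intensity_from_db(level_db: float, i_ref: float = I_REF) -> float:
    """Convert an intensity level in decibels to an intensity in W/m^2."""
    return i_ref * 10 ** (level_db / 10)


def db_from_intensity(intensity: float, i_ref: float = I_REF) -> float:
    """Convert an intensity in W/m^2 to an intensity level in decibels."""
    return 10 * math.log10(intensity / i_ref)


# Levels quoted by Yong; treating them as intensity levels is an assumption.
big_brown_bat_db = 138.0
whispering_bat_db = 110.0

# The ratio of intensities depends only on the difference in decibels,
# so the choice of reference intensity cancels out.
ratio = intensity_from_db(big_brown_bat_db) / intensity_from_db(whispering_bat_db)

print(f"Big brown bat: {intensity_from_db(big_brown_bat_db):.1f} W/m^2")
print(f"Whispering bat: {intensity_from_db(whispering_bat_db):.2f} W/m^2")
print(f"Intensity ratio: {ratio:.0f}")
```

The 28-decibel difference corresponds to a factor of 10^2.8 in intensity, so under these assumptions the big brown bat's call is several hundred times more intense than that of a "whispering" bat.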

Yong examines senses that Russ and I never consider, such as smell, taste, surface vibrations, contact, and flow. He wonders about the relative value of nociception [a reflex action to avoid a noxious stimulus] and the sensation of pain [a subjective feeling created by the brain].

The evolutionary benefit of nociception is abundantly clear. It’s an alarm system that allows animals to detect things that might harm or kill them, and take steps to protect themselves. But the origin of pain, on top of that, is less obvious. What is the adaptive value of suffering?

On the continuum ranging from life’s unity to diversity, Yong excels at celebrating the diverse, while Russ and I focus on how physics reveals unifying principles. I’m sometimes frustrated that Yong doesn’t delve into the physics of these topics more, but I am in awe of how he highlights so many strange and wonderful animals. There’s a saying that “nothing in biology makes sense except in light of evolution.” That’s true for An Immense World, which is a survey of how the evolution of sensory perception shapes the way animals interact, mate, hunt their prey, and avoid their predators.

Two chapters of An Immense World I found especially interesting were about sensing electric and magnetic fields. When discussing the black ghost knifefish’s ability to sense electric fields, Yong writes

Just as sighted people create images of the world from patterns of light shining onto their retinas, an electric fish creates electric images of its surroundings from patterns of voltage dancing across its skin. Conductors shine brightly upon it. Insulators cast electric shadows.

Then he notes that

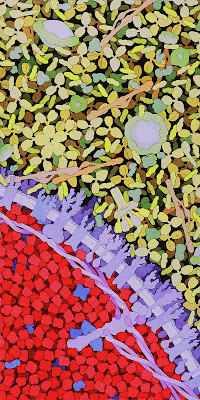

Fish use electric fields not just to sense their environment but also to communicate. They court mates, claim territory, and settle fights with electric signals in the same way other animals might use colors or songs.

Even bees can detect electric fields. For instance, the 100 V/m electric field that exists at the earth’s surface can be sensed by bees.

Although flowers are negatively charged, they grow into the positively charged air. Their very presence greatly strengthens the electric fields around them, and this effect is especially pronounced at points and edges, like leaf tips, petal rims, stigmas, and anthers. Based on its shape and size, every flower is surrounded by its own distinctive electric field. As [scientist Daniel] Robert pondered these fields, “suddenly the question came: Do bees know about this?” he recalls. “And the answer was yes.”

The chapter on sensing magnetic fields is different from the others, because we don’t yet know how animals sense these fields.

Magnetoreception research has been polluted by fierce rivalries and confusing errors, and the sense itself is famously difficult both to study and to comprehend. There are open questions about all the senses, but at least with vision, smell, or even electroreception, researchers know roughly how they work and which sense organs are involved. Neither is true for magnetoreception. It remains the sense that we know least about, even though its existence was confirmed decades ago.

Yong lists three possible mechanisms for magnetoreception: 1) magnetite, 2) electromagnetic induction, and 3) magnetic effects on radical pairs. Russ and I discuss the first two in IPMB. I get the impression that the third is Yong’s favorite, but I remain skeptical. In my book Are Electromagnetic Fields Making Me Ill? I say that “the jury is still out” on the radical pair hypothesis.

If you want to read a beautifully written book that explores how much of the physics in Intermediate Physics for Medicine and Biology can be used by species throughout the animal kingdom to sense their environment, I recommend An Immense World. You’ll love it.

Umwelt: The hidden sensory world of animals. By Ed Yong.

https://www.youtube.com/watch?v=Pzsjw-i6PNc

Ed Yong on An Immense World