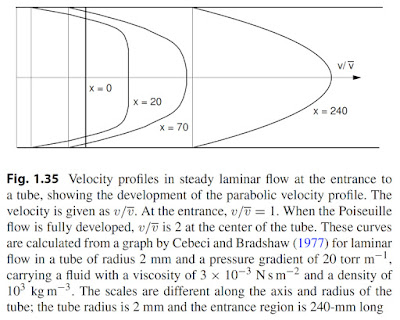

IPMB’s Figure 1.35 is shown below.

The entry region causes deviations from Poiseuille flow in larger vessels. Suppose that blood flowing with a nearly flat velocity profile enters a vessel, as might happen when blood flowing in a large vessel enters the vessel of interest, which has a smaller radius. At the wall of the smaller vessel, the flow is zero. Since the blood is incompressible, the average velocity is the same at all values of x, the distance along the vessel. (We assume the vessel has a constant cross-sectional area.) However, the velocity profile v(r) changes with distance x along the vessel. At the entrance to the vessel (x = 0), there is a very abrupt velocity change near the walls. As x increases, a parabolic velocity profile is attained. The transition or entry region is shown in Fig. 1.35. In the entry region, the pressure gradient is different from the value for Poiseuille flow. The velocity profile cannot be calculated analytically in the entry region. Various numerical calculations have been made, and the results can be expressed in terms of scaled variables (see, for example, Cebeci and Bradshaw 1977). The Reynolds number used in these calculations was based on the diameter of the pipe, D = 2Rp, and the average velocity. The length of the entry region is

$$ L = 0.05\,D\,N_{R,D} = 0.1\,R_p\,N_{R,D} = 0.2\,R_p\,N_{R,R_p}. \qquad (1.63) $$
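The three forms of Eq. 1.63 differ only in which length scale enters the Reynolds number: because D = 2Rp, the diameter-based number is twice the radius-based one. Here is a minimal sketch that checks this numerically. The helper names and the illustrative input values (radius, mean speed, density, viscosity) are assumptions chosen for the example, not taken from IPMB.

```python
def reynolds_number(length, mean_speed, density, viscosity):
    """Reynolds number rho * v * length / eta for a chosen length scale."""
    return density * mean_speed * length / viscosity


def entry_length_forms(radius, mean_speed, density, viscosity):
    """Evaluate the three forms of Eq. 1.63 for a vessel of radius Rp.

    Returns (0.05 D N_R,D, 0.1 Rp N_R,D, 0.2 Rp N_R,Rp). All three should
    agree, because D = 2 Rp and therefore N_R,D = 2 N_R,Rp.
    """
    diameter = 2.0 * radius
    re_d = reynolds_number(diameter, mean_speed, density, viscosity)  # based on D
    re_r = reynolds_number(radius, mean_speed, density, viscosity)    # based on Rp
    return (0.05 * diameter * re_d,
            0.1 * radius * re_d,
            0.2 * radius * re_r)


# Illustrative (assumed) values: Rp = 2 mm, mean speed 0.9 m/s,
# density 1000 kg/m^3, viscosity 3e-3 kg/(m s), giving N_R,D = 1200.
forms = entry_length_forms(2e-3, 0.9, 1000.0, 3e-3)
print(forms)  # each entry is about 0.24 m
```

Keeping the length scale as an explicit argument makes it hard to mix a radius-based Reynolds number with the diameter-based coefficient, which is the usual source of factor-of-two errors with this formula.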

This figure first appeared in the 3rd edition of IPMB, for which Russ was sole author. I got to wondering how he created it.

|

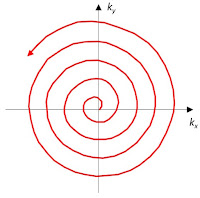

| Momentum Transfer in Boundary Layers, by Cebeci and Bradshaw. |

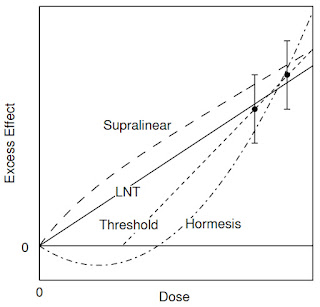

Figures 5.9 and 5.10 show the velocity profiles, u/u0, and the dimensionless centerline (maximum) velocity, uc/u0, as functions of 2x*/Rd in the entrance region of a pipe. Here x* = x/r0 and Rd = u0d/ν. Figure 5.10 also shows the measured values of the centerline velocity obtained by Pfenninger (1951). According to the results of Fig. 5.10, the centerline velocity has almost reached its asymptotic value of 2 at 2x*/Rd = 0.20. Thus the entrance length for a laminar flow in a circular pipe is

$$ \frac{l_e}{d} = \frac{R_d}{20} \qquad (5.7.12) $$

Their Fig. 5.9 is shown below.

A photograph of Figure 5.9 from Momentum Transfer in Boundary Layers, by Cebeci and Bradshaw (1977).

At the vessel entrance (x* = 0), the flow is uniform across its cross section with speed u0. The curve corresponding to 2x*/Rd = 0.531 looks almost exactly like Poiseuille flow, with the shape of a parabola and a maximum speed equal to 2u0. For the curve 2x*/Rd = 0.101 the flow is close to, but not exactly, Poiseuille flow. Cebeci and Bradshaw somewhat arbitrarily define the entrance length as corresponding to 2x*/Rd = 0.2.
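Written out, the step from the criterion 2x*/Rd = 0.2 to Cebeci and Bradshaw's entrance length takes two lines. This sketch uses only their definitions x* = x/r0 and Rd = u0d/ν, plus d = 2r0:

```latex
\frac{2x^*}{R_d} = 0.2, \qquad x^* = \frac{x}{r_0}
\;\;\Longrightarrow\;\; x = 0.1\,r_0 R_d = 0.05\,d\,R_d \qquad (d = 2r_0)
\;\;\Longrightarrow\;\; \frac{l_e}{d} = \frac{R_d}{20}
```

Since u0 is the uniform entrance speed, and therefore the mean speed, Rd is a diameter-based Reynolds number built from the average velocity, just like N_{R,D} in IPMB. The result l_e = 0.05 d R_d is therefore the first form of Eq. 1.63, L = 0.05 D N_{R,D}.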

The example that Russ analyzed in the caption to Fig. 1.35 corresponds to a large vessel such as the brachial artery in your upper arm. A diameter of 4 mm and an entrance length of 240 mm imply a Reynolds number of Rd = 20 × (240 mm)/(4 mm) = 1200. In this case, the entrance length is much greater than the diameter and is similar to the length of the vessel. If we consider a small vessel like a capillary, however, we get a different picture. A typical Reynolds number for a capillary would be du0ρ/η = (8 × 10⁻⁶ m)(0.001 m/s)(1000 kg/m³)/(3 × 10⁻³ kg/m/s) = 0.0027, which implies an entrance length of (8 × 10⁻⁶ m)(0.0027)/20, or about one nanometer. In other words, the parabolic flow distribution is established almost immediately, over a distance much smaller than the vessel’s length and even its radius. The entrance length is negligible in small vessels like capillaries.
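The arithmetic in the previous paragraph can be reproduced in a few lines. This is a sketch that applies Eq. 5.7.12, l_e/d = Rd/20, exactly as the post does, with the post's numbers for the brachial artery and the capillary. The function names are hypothetical helpers introduced for this example.

```python
def reynolds_from_entrance_length(entrance_length, diameter):
    """Invert Eq. 5.7.12, l_e/d = R_d/20, to get R_d = 20 l_e / d."""
    return 20.0 * entrance_length / diameter


def reynolds_number(diameter, mean_speed, density, viscosity):
    """Diameter-based Reynolds number d u0 rho / eta."""
    return density * mean_speed * diameter / viscosity


def entrance_length(diameter, reynolds_d):
    """Eq. 5.7.12 solved for the entrance length: l_e = d R_d / 20."""
    return diameter * reynolds_d / 20.0


# Brachial artery (values from the post): d = 4 mm, l_e = 240 mm
re_artery = reynolds_from_entrance_length(0.240, 0.004)  # about 1200

# Capillary (values from the post): d = 8 um, u0 = 1 mm/s,
# rho = 1000 kg/m^3, eta = 3e-3 kg/(m s)
re_cap = reynolds_number(8e-6, 1e-3, 1000.0, 3e-3)      # about 0.0027
le_cap = entrance_length(8e-6, re_cap)                    # about 1e-9 m

print(f"artery: Rd = {re_artery:.0f}")
print(f"capillary: Rd = {re_cap:.4f}, l_e = {le_cap:.2e} m")
```

The same entrance-length function serves both vessels; only the inputs change, which makes the roughly eight-orders-of-magnitude gap between the two entrance lengths easy to see.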

Tuncer Cebeci is a Turkish-American mechanical engineer who worked for the Douglas Aircraft Company and was chair of the Department of Aerospace Engineering at California State University Long Beach. He has authored many textbooks in aeronautics and developed the Cebeci-Smith model used in computational fluid dynamics. Peter Bradshaw was a professor in the Department of Aeronautics at Imperial College London and then at Stanford, and is a fellow of the Royal Society. He developed the “Bradshaw Blower,” a type of wind tunnel used to study turbulent boundary layers.

Cebeci and Bradshaw describe why they wrote Momentum Transfer in Boundary Layers in their preface.

This book is intended as an introduction to fluid flows controlled by viscous or turbulent stress gradients and to modern methods of calculating these gradients. It is nominally self-contained, but the explanations of basic concepts are intended as review for senior students who have already taken an introductory course in fluid dynamics, rather than for beginning students. Nearly all stress-dominated flows are shear layers, the most common being the boundary layer on a solid surface. Jets, wakes, and duct flows are also shear layers and are discussed in this volume. Nearly all modern methods of calculating shear layers require the use of digital computers. Computer-based methods, involving calculations beyond the reach of electromechanical desk calculators, began to appear around 10 years ago... With the exception of one or two specialist books... this revolution has not been noticed in academic textbooks, although the new methods are widely used by engineers.

This post illustrates how IPMB merely scratches the surface when explaining how physics impacts medicine and biology. Behind each sentence, each figure, and each reference is a story. I wish Russ and I could tell them all.