Structures: Or Why Things Don't Fall Down, by James Gordon.

In this third and final post about James Gordon’s book Structures: Or Why Things Don’t Fall Down, I analyze shear.

If tension is about pulling and compression is about pushing, then shear is about sliding. In other words, a shear stress measures the tendency for one part of a solid to slide past the next bit: the sort of thing which happens when you throw a pack of cards on the table or jerk the rug from under someone’s feet. It also nearly always occurs when anything is twisted, such as one’s ankle or the driving shaft of a car…

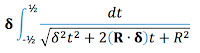

In

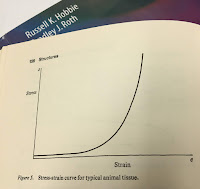

Intermediate Physics for Medicine and Biology,

Russ Hobbie and I introduce the

shear stress,

shear strain, and

shear modulus, but we don’t do much with them. After Gordon defines these quantities, however, he launches into to a fascinating discussion about shear and

anisotropy: different properties in different directions.

Cloth is one of the commonest of all artificial materials and it is highly anisotropic….If you take a square of ordinary cloth in your hands—a handkerchief might do—it is easy to see that the way in which it deforms under a tensile load depends markedly upon the direction in which you pull it. If you pull, fairly precisely, along either the warp or the weft threads, the cloth will extend very little; in other words, it is stiff in tension. Furthermore, in this case, if one looks carefully, one can see that there is not much lateral contraction as a result of the pull…Thus the Poisson’s ratio…is low.

However, if you now pull the cloth at 45° to the direction of the threads—as a dressmaker would say, ‘in the bias direction’—it is much more extensible; that is to say, Young’s modulus in tension is low. This time, though, there is a large lateral contraction, so that, in this direction, the Poisson’s ratio is high.

This analysis led me to ruminate about the different roles of anisotropy in bioelectricity versus biomechanics. The mechanical behavior Gordon describes is different from the electrical conductivity of a similar material. As explained in Section 7.9 of IPMB, the current density and electric field in an anisotropic material are related by a conductivity tensor (Eq. 7.39). A cloth-like material would have the same conductivity parallel and perpendicular to the threads, and the off-diagonal terms in the tensor would be zero. Therefore, the conductivity tensor would be proportional to the identity matrix. Homework Problem 26 in Chapter 4 of IPMB shows how to write the tensor in a coordinate system rotated by 45°. The result is that the conductivity is the same in the 45° direction as it is along and across the fibers. As far as its electrical properties are concerned, cloth is isotropic!
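The rotation argument can be written out explicitly. This is only a sketch in notation assumed here, which may differ from that of Eq. 7.39 and Problem 26 in IPMB: σ_x and σ_y stand for the conductivities along and across the threads, and R is a counterclockwise rotation by 45° (the sign of the off-diagonal terms depends on that convention).

```latex
\sigma' = R \, \sigma \, R^{T}
= \frac{1}{2}
\begin{pmatrix} 1 & -1 \\ 1 & 1 \end{pmatrix}
\begin{pmatrix} \sigma_x & 0 \\ 0 & \sigma_y \end{pmatrix}
\begin{pmatrix} 1 & 1 \\ -1 & 1 \end{pmatrix}
= \frac{1}{2}
\begin{pmatrix}
\sigma_x + \sigma_y & \sigma_x - \sigma_y \\
\sigma_x - \sigma_y & \sigma_x + \sigma_y
\end{pmatrix}
```

For a cloth-like material σ_x = σ_y = σ_0, so the off-diagonal terms vanish and σ' = σ_0 I, the same tensor as before the rotation.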

I spent much of my career analyzing anisotropy in cardiac muscle, and I was astonished when I realized how different anisotropy appears in mechanics compared to electricity. Gordon’s genius was to analyze a material, such as cloth, that has identical properties in two perpendicular directions, yet is nevertheless mechanically anisotropic. If you study muscle, which has different mechanical and electrical properties along versus across the fibers, the difference between mechanical and electrical anisotropy is not as obvious.

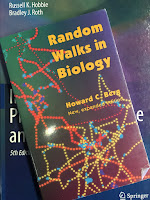

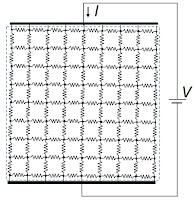

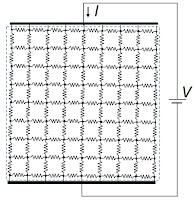

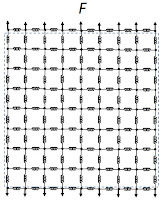

This difference got me thinking: is the electrical conductivity of a cloth-like material really isotropic? Well, yes, it must be when analyzed in terms of the conductivity tensor. But suppose we look at the material microscopically. The figure below shows a square grid of resistors that represents the electrical behavior of tissue. Each resistor is the same, having resistance R. To determine the grid’s macroscopic resistance, we apply a voltage difference V and determine the total current I through the grid. The current must pass through N vertical resistors one after the other, so the total resistance through one vertical line is NR. However, there are N such lines in parallel, reducing the total resistance by a factor of N. The net result: the resistance of the entire grid is the resistance of a single resistor, R.

The electrical behavior of tissue represented by a grid of resistors.
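The series-and-parallel bookkeeping for the square grid can be checked in a few lines of Python. This is a minimal sketch: the helper name `grid_resistance` and the sample values are illustrative assumptions, not anything taken from IPMB.

```python
def grid_resistance(r, n_series, n_parallel):
    """Resistance of n_parallel identical lines, each a chain of
    n_series resistors of resistance r (hypothetical helper)."""
    line_resistance = n_series * r        # resistors in series add
    return line_resistance / n_parallel   # identical lines in parallel divide


R = 100.0  # ohms; arbitrary illustrative value
for N in (1, 5, 50):
    # An N-by-N grid: N resistors per vertical line, N lines side by side.
    print(N, grid_resistance(R, N, N))
```

Whatever N is chosen, the factor of N from the series chains cancels the factor of N from the parallel lines.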

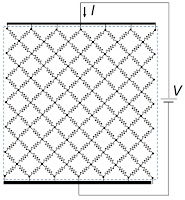

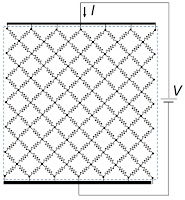

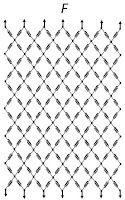

Now rotate the grid by 45°. In this case, the current takes a tortuous path through the tissue, with the path length in the vertical direction increasing by a factor of the square root of two. However, more vertical lines are present per unit length in the horizontal direction (count ’em). How many more? The square root of two times more! The two factors cancel, so the grid again has a resistance R. From a microscopic point of view, the conductivity is indeed isotropic.

The electrical behavior of tissue represented by a rotated grid of resistors.
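The cancellation of the two square-root-of-two factors in the rotated grid can be sketched the same way. The function name is hypothetical, and the counts are treated as continuous numbers, which assumes a grid large enough that edge effects don't matter.

```python
import math


def rotated_grid_resistance(r, n):
    """Resistance of the grid after a 45-degree rotation, where n is the
    number of resistors along one edge of the unrotated square sample.
    Counts are treated as continuous (large-grid assumption)."""
    resistors_per_path = math.sqrt(2) * n  # each zigzag path is sqrt(2) longer
    parallel_paths = math.sqrt(2) * n      # sqrt(2) more paths across the width
    return r * resistors_per_path / parallel_paths


print(rotated_grid_resistance(100.0, 50))
```

The longer path raises the resistance of each line, the extra lines lower the resistance of the whole, and the result is the single-resistor value again, up to floating-point rounding.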

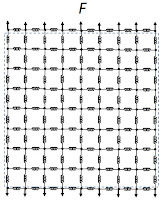

Next, replace the resistors by springs. When you pull upwards, the vertical springs stretch, each with a spring constant k. Using an analysis similar to the one above, the net spring constant of the grid is also k.

The mechanical behavior of tissue represented by a grid of springs.
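One detail hides inside the "similar analysis": springs combine the opposite way from resistors. Springs in series get softer (their compliances 1/k add), while springs side by side get stiffer (their spring constants add), so k plays the role of a conductance rather than a resistance. A short sketch, with `grid_stiffness` as a hypothetical helper name, assuming only the vertical springs carry load:

```python
def grid_stiffness(k, n_series, n_parallel):
    """Net spring constant of n_parallel identical chains, each made of
    n_series springs of constant k (hypothetical helper)."""
    chain_stiffness = k / n_series       # series: compliances 1/k add
    return chain_stiffness * n_parallel  # parallel: spring constants add


k = 2.0  # newtons per meter; arbitrary illustrative value
for N in (1, 4, 40):
    print(N, grid_stiffness(k, N, N))
```

As with the resistors, the two factors of N cancel and the grid behaves like a single spring.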

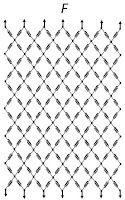

Now analyze the grid after it's been rotated by 45°. Even if the spring constant were huge (that is, if the springs were very stiff), the grid would stretch by shearing the rotated squares into diamonds. The tissue would have almost no Young’s modulus in the 45° direction and the Poisson's ratio would be about one; the grid would contract horizontally as it expanded vertically (even if the springs themselves didn't stretch at all). This arises because the springs act as if they're connected by hinges. It reminds me of those gates my wife and I installed to prevent our young daughters from falling down the steps. You would need horizontal struts or vertical ties to prevent such shearing.

The mechanical behavior of tissue represented by a rotated grid of springs.
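The claim that Poisson's ratio is about one follows from the geometry of a single hinged cell. The sketch below assumes the springs are perfectly rigid and freely hinged, so each rotated square becomes a rhombus whose side length never changes; the function name and the strain values are illustrative assumptions.

```python
import math


def hinged_poisson_ratio(vertical_strain, side=1.0):
    """Poisson's ratio of one rotated square cell with rigid, hinged sides.
    The cell starts as a square standing on a corner and is stretched
    along its vertical diagonal by the given fractional strain."""
    v0 = side * math.sqrt(2)           # vertical diagonal of the square
    h0 = side * math.sqrt(2)           # horizontal diagonal of the square
    v1 = v0 * (1.0 + vertical_strain)  # stretched vertical diagonal
    # Rigid sides: (v/2)^2 + (h/2)^2 = side^2 fixes the horizontal diagonal.
    h1 = math.sqrt(4.0 * side**2 - v1**2)
    lateral_strain = (h1 - h0) / h0    # negative: the cell narrows
    return -lateral_strain / vertical_strain


for strain in (1e-4, 1e-3, 1e-2):
    print(strain, hinged_poisson_ratio(strain))
```

For small strains the ratio comes out very close to one, and no spring has changed length, which is why the stiffness of the springs contributes nothing to the modulus in the 45° direction.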

In conclusion, you can't represent the mechanical behavior of an isotropic tissue using a square grid of springs without struts or ties. Such a microscopic structure corresponds to cloth, which is anisotropic. A square grid fails to capture properly the shearing of the tissue. You can, however, represent the electrical behavior of an isotropic tissue using a square grid of resistors without “electrical struts or ties.”

Gordon elaborated on the anisotropic mechanical properties of cloth in his own engaging way.

In 1922 a dressmaker called Mlle Vionnet set up shop in Paris and proceeded to invent the “bias cut.” Mlle Vionnet had probably never heard of her distinguished compatriot S. D. Poisson—still less of his ratio—but she realized intuitively that there are more ways of getting a fit than by pulling on strings…if the cloth is disposed at 45°…one can exploit the resulting large lateral contraction so as to get a clinging effect.

Wikipedia adds:

Vionnet's bias cut clothes dominated haute couture in the 1930s, setting trends with her sensual gowns worn by such internationally known actresses as Marlene Dietrich, Katharine Hepburn, Joan Crawford and Greta Garbo. Vionnet’s vision of the female form revolutionized modern clothing, and the success of her unique cuts assured her reputation.