The reconstruction problem can be stated as follows. A function f(x,y) exists in two dimensions. Measurements are made that give projections: the integrals of f(x,y) along various lines as a function of displacement perpendicular to each line…F(θ,x'), where x' is the distance along the axis at angle θ with the x axis. The problem is to reconstruct f(x,y) from the set of functions F(θ,x'). Several different techniques can be used… We will consider two of these techniques: reconstruction by Fourier transform […] and filtered back projection…. The projection at angle θ is integrated along the line y':In IPMB, Homework Problem 32 asks you can take the function (the “object”)

[where x = x' cosθ – y' sinθ and y = x' sinθ + y' cosθ]… The definition of the back projection is

where x' is determined for each projection using Eq. 12.27 [x' = x cosθ + y sinθ].

and calculate the projection using Eq. 12.29, and then calculate the back projection using Eq. 12.30. The object and the back projection are different. The moral of the story is that you cannot solve the tomography problem by back-projection alone. Before you back-project, you must filter.*

I like having two homework problems that illustrate the same point, one that I can do in class and another that I can assign to the students. IPMB contains only one example of projecting and then back-projecting, but recently I have found another. So, dear reader, here is a new homework problem; do this one in class, and then assign Problem 32 as homework.

Problem 32 ½ Consider the object f(x,y) = √(a2 − x2 − y2)/a for √(x2 +y2) less than a, and 0 otherwise.

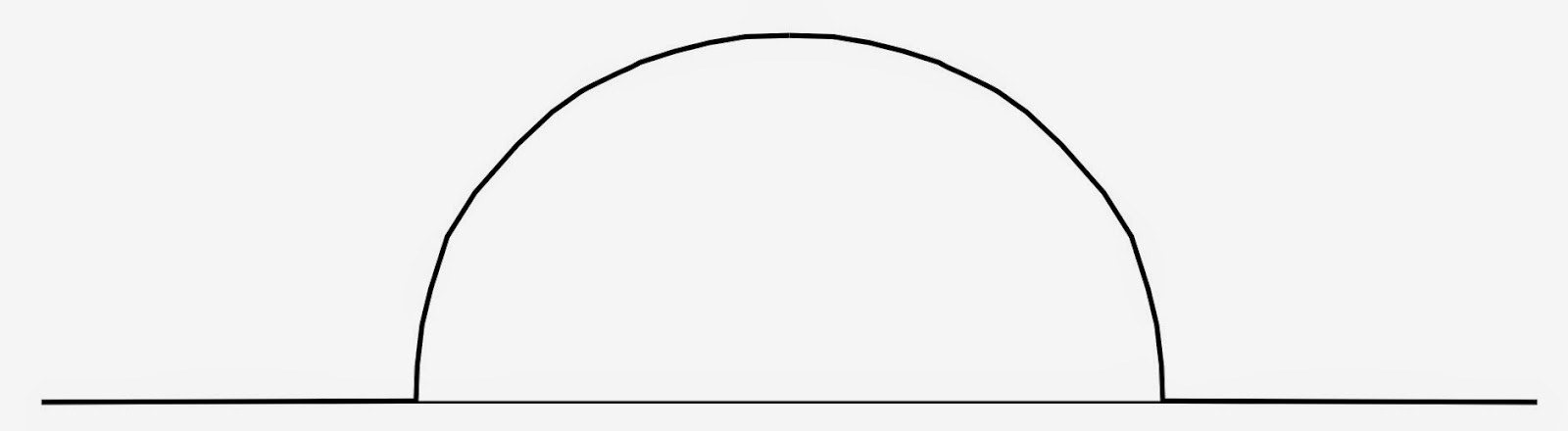

(a) Plot f(x,0) vs. x

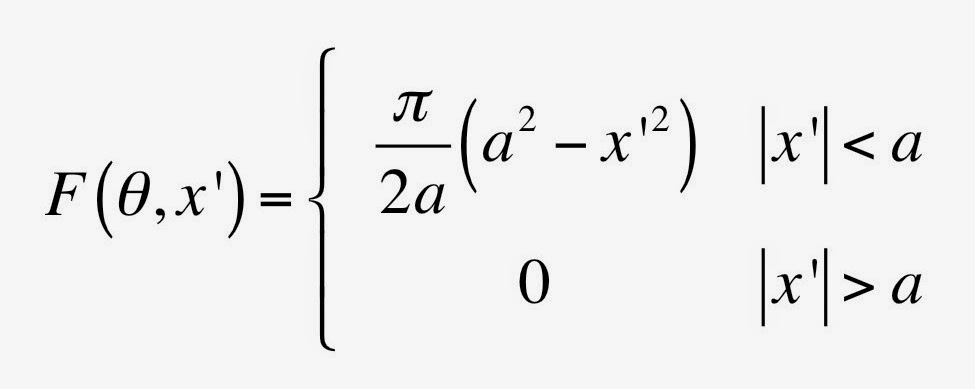

(b) Calculate the projection F(θ,x'). Plot F(0,x') vs. x'.

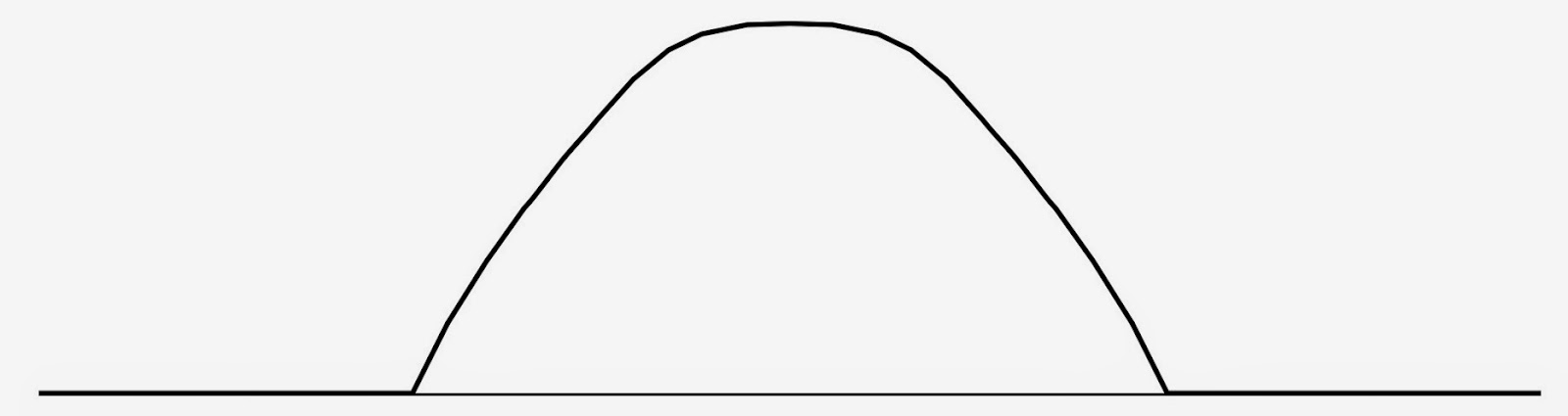

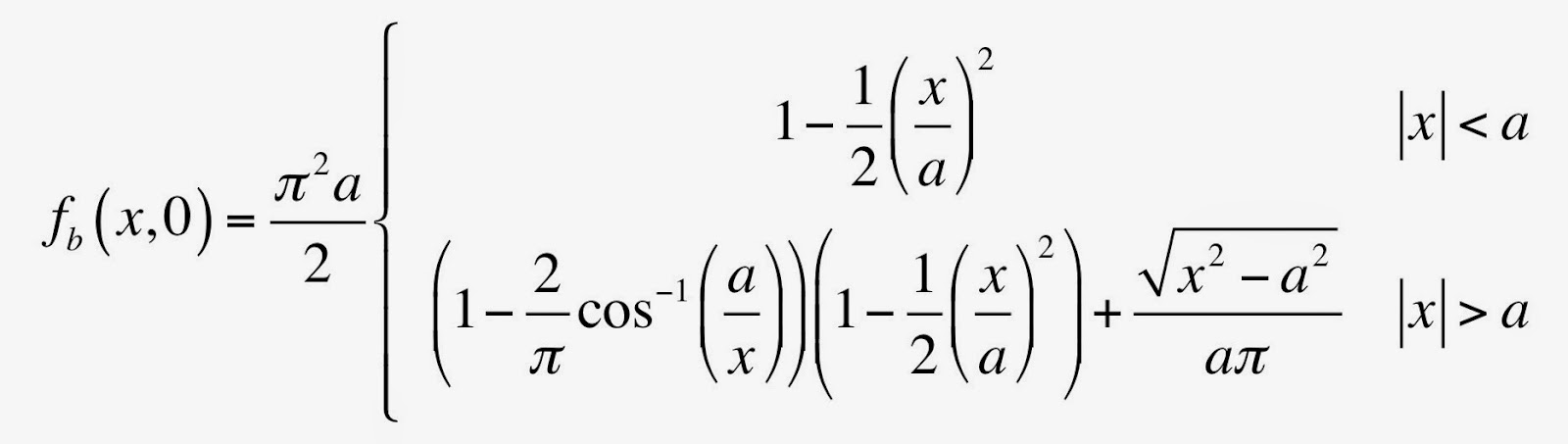

(c) Use the projection from part (b) to calculate the back projection fb(x,y). Plot fb(x,0) vs. x.

(d) Compare the object and the back projection. Explain qualitatively how they differ.The nice thing about this function

(as well as the function in Problem 32) is that f(x,y) does not depend on direction, so F(θ,x') is independent of θ; you can make you life easier and solve it for θ = 0. Similarly, you can calculate the back projection along any line through the origin, such as y = 0. I won’t solve Problem 32½ here in detail, but let me outline the solution.

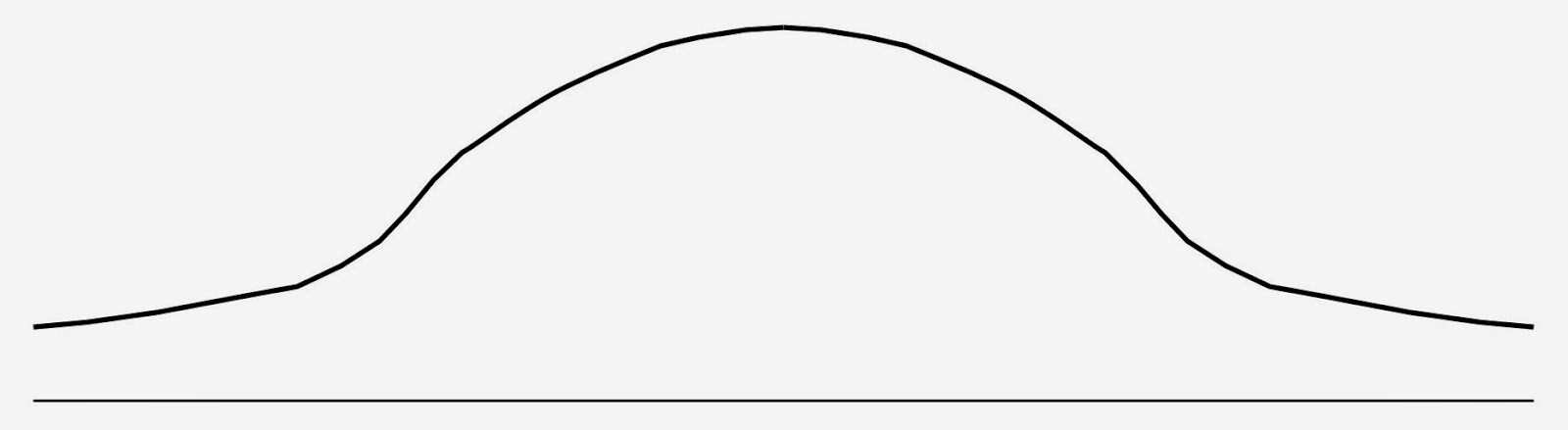

Below is a plot of f(x,0) versus x

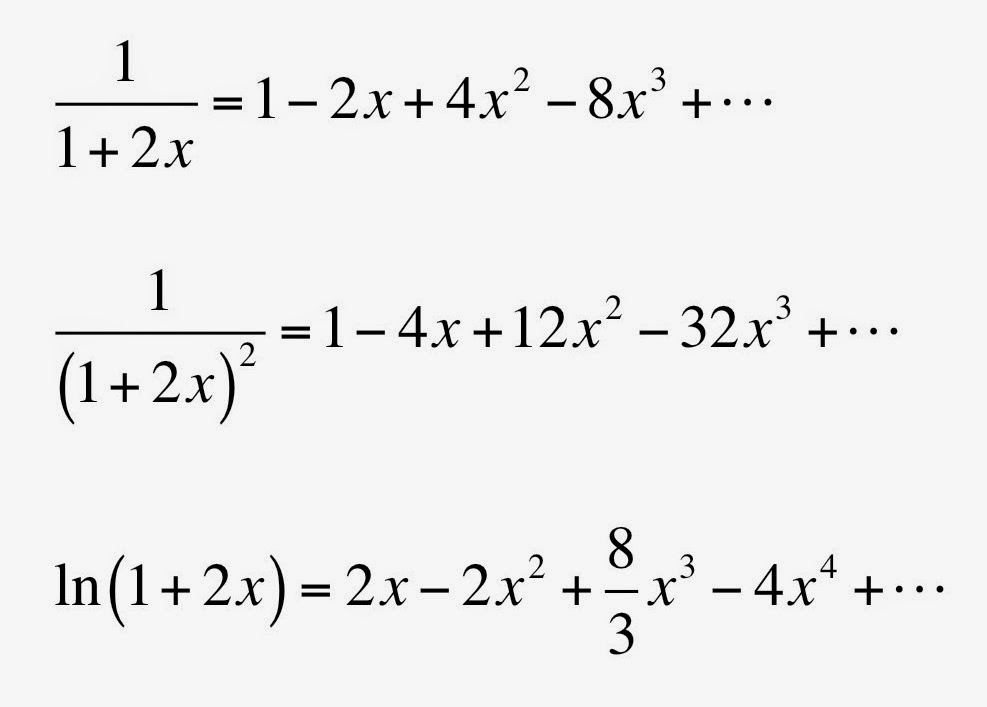

To take the projection in part (b) use θ = 0, so x' = x and y' = y. If |x| is greater than a, you integrate over a function that is always zero, so F(0,x') = 0. If |x| is less than a, you must do more work. The limits of the integral over y become −√(a2 – x2) to +√(a2 – x2). The integral is not too difficult (it’s in my mathematical handbook), and you get F(0,x) = π(a2 – x2)/2a. Because the projection is independent of θ, you can generalize to

The plot, an inverted parabola, is

In part (c) you need to find the back-projection. I suggest calculating it for the line y = 0. Once you have it, you can find it for any point by replacing x by √(x2 +y2). The back-projection for |x| less than a is easy. The integral in Eq. 12.30 gives another inverted parabola. For |x| is greater than a, the calculation is more complicated because some angles give zero and some don’t. A little geometry will convince you that the integral should range from cos-1(a/x) to π - cos-1(a/x). Because the function is even around π/2, you can make your life easier by multiplying by two and integrating from cos-1(a/x) to π/2. The only way I know to show how you get cos-1(a/x) is to draw a picture of a line through the point x greater than a, y = 0 that is tangent to the circle x2 + y2 = a2, and then do some trigonometry. When you then evaluate the integral you get

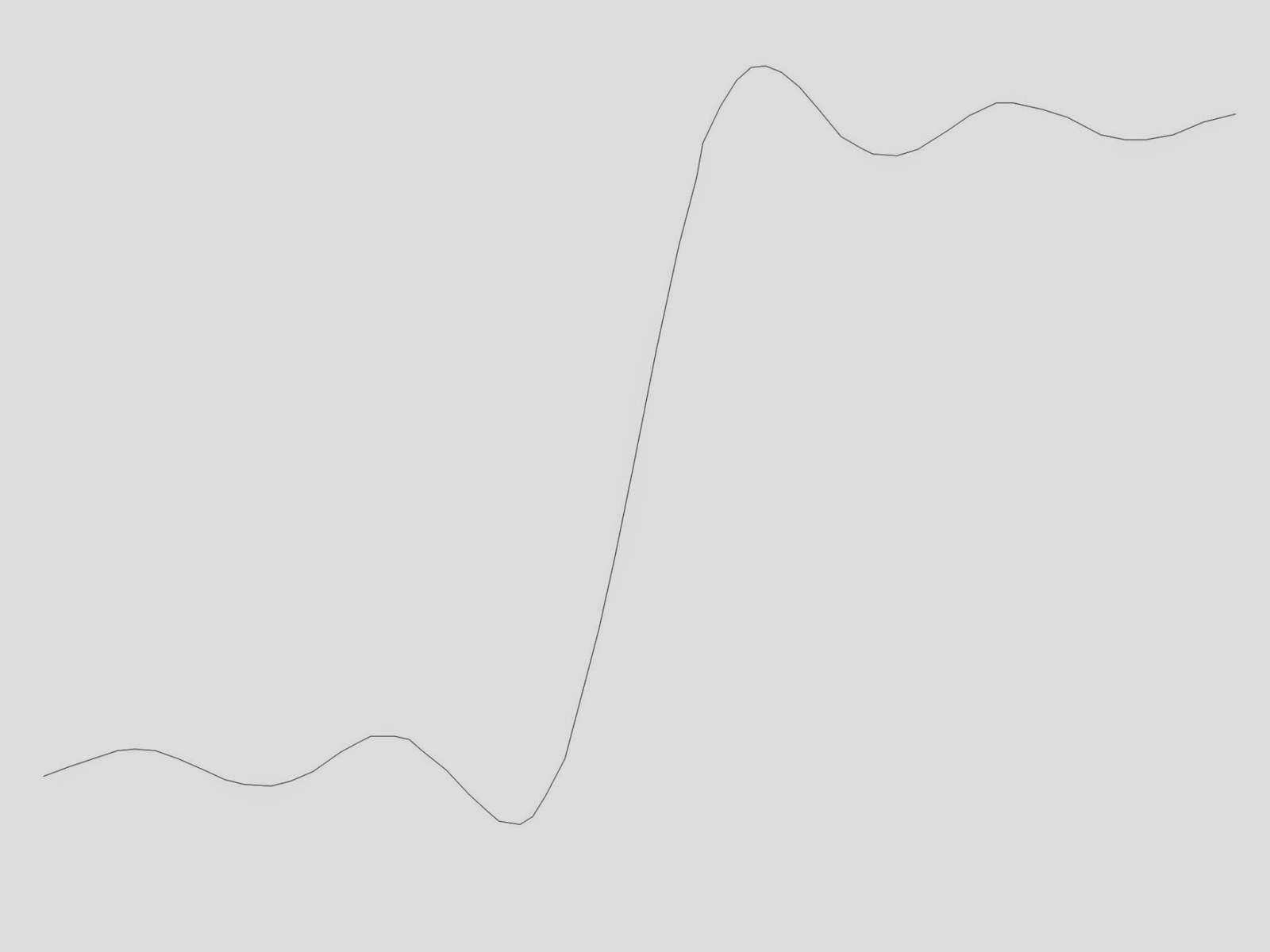

A plot of this complicated looking function is

To answer part (d), compare your plots in (a) and (c). The object in (a) is confined entirely inside the circle x2 + y2 = a2, but the back-projection in (c) spreads over all space. One could say the back-projection produced a smeared-out version of the object. Why are they not the same? We didn’t filter before we back projected.

If you really want to have fun, show that the limit of the back-projection goes to zero as x goes to infinity. This is surprisingly difficult—that last term doesn’t look like it’ll go to zero—and you need to keep more than one term in your Taylor series to make it work.

The back projection shown above looks similar to the back projection shown in Fig. 12.23 of IPMB. My original goal was to calculate the back projection in Fig. 12.23 exactly, but I got stuck trying to evaluate the integral

I sometimes ask myself: why do I assign these analytical examples of projections and back-projections? Anyone solving the tomography problem in the clinic will certainly use a computer. Is anything gained by doing analytical calculations? Yes. The goal in doing these problems is to gain insight. A computer program is a black box: you put projections in and out comes an image, but you don’t know what happens in between. Analytical calculations force you to work through each step. Please don’t skip the plots in parts (a) and (c), and the comparison of the plots in part (d), otherwise you defeat the purpose; all you have done is an exercise in calculus and you learn nothing about tomography.

I wish I had come up with this example six months ago, so Russ and I could have included it in the 5th edition of IPMB. But it’s too late, as the page proofs have already been corrected and the book will be printed soon. This new homework problem will have to wait for the 6th edition!

*Incidentally, Prob. 12.32 has a typo in the second line: "|x|" should really be "√(x2 +y2)".