where a, b, c, and d are constants. You can determine these constants by requiring that f(x,y) is equal to the known data at points (xi,yj), (xi+1,yj), (xi,yj+1), and (xi+1,yj+1):

Solving these four equations for the four unknowns a, b, c, and d, plugging those values into the equation for f(x,y), and then doing a bit of algebra gives you

where xi+1 = xi + Δx and yj+1 = yj + Δy. To see why this makes sense, let x = xi and y = yj. In that case, the last three terms in this expression go to zero, and the first term reduces to f(xi,yj), just as you would want an interpolation function to behave. As you can check for yourself, this is true of all four data points. If you hold y fixed then the function is a linear function of x, and if you hold x fixed then the function is a linear function of y. If you assume y = e x, then the function is quadratic.

If you want to try it yourself, see http://www.ajdesigner.com/phpinterpolation/bilinear_interpolation_equation.php

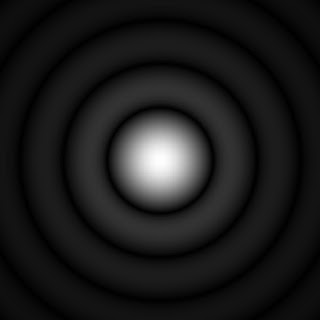

In the 4th edition of Intermediate Physics for Medicine and Biology, Russ Hobbie and I introduce bilinear interpolation in Problem 20 of Chapter 12, in the context of computed tomography. In CT, you obtain the Fourier transform of the image at points in a polar coordinate grid ki, θj. In other words, the points lie on concentric circles in the spatial frequency plane, each of radius ki. In order to compute a numerical two-dimensional Fourier reconstruction to recover the image, one needs the Fourier transform on a Cartesian grid kx,n, ky,m. Thus, one needs to interpolate from data at ki, θj to kx,n, ky,m. In Problem 20, we suggest doing this using bilinear interpolation, and ask the reader to perform a numerical example.

I like bilinear interpolation, because it is simple, intuitive, and often “good enough.” But it is not necessarily the best way to proceed. Tomogrphic methods arise not only in CT but also in synthetic aperture radar (SAR) (see: Munson, D. C., J. D. O’Brien, and W. K. Jenkins (1983) “A Tomographic Formulation of Spotlight-Mode Synthetic Aperture Radar,” Proceedings of the IEEE, Volume 71, Pages 917–925). In their conference proceeding paper “A Comparison of Algorithms for Polar-to-Cartesian Interpolation in Spotlight Mode SAR” (IEEE International Conference on Acoustics, Speech and Signal Processing '85, Volume 10, Pages 1364–1367, 1985), Munson et al. write

Given the polar Fourier samples, one method of image reconstruction is to interpolate these samples to a cartesian grid, apply a 2-D inverse FFT, and to then display the magnitude of the result. The polar-to-cartesian interpolation operation must be of extremely high quality to prevent aliasing . . . In an actual system implementation the interpolation operation may be much more computationally expensive than the FFT. Thus, a problem of considerable importance is the design of algorithms for polar-to-cartesian interpolation that provide a desirable quality/computational complexity tradeoff.Along the same lines, O’Sullivan (“A Fast Sinc Function Gridding Algorithm for Fourier Inversion in Computer Tomography,” IEEE Trans. Medical Imaging, Volume 4, Pages 200–207, 1985) writes

Application of Fourier transform reconstruction methods is limited by the perceived difficulty of interpolation from the measured polar or other grid to the Cartesian grid required for efficient computation of the Fourier transform. Various interpolation schemes have been considered, such as nearest-neighbor, bilinear interpolation, and truncated sinc function FIR interpolators [3]-[5]. In all cases there is a tradeoff between the computational effort required for the interpolation and the level of artifacts in the final image produced by faulty interpolation.There has been considerable study of this problem. For instance, see

Stark et al. (1981) “Direct Fourier reconstruction in computer tomography,” IEEE Trans. Acoustics, Speech, and Signal Processing, Volume 29, Pages 237–245.Going into the details of this topic would take me into more deeply into signal processing than I am comfortable with. Hopefully, Problem 20 in IPMB will give you a flavor for what sort of interpolation needs to be done, and the references given in this blog entry can provide an entry to more detailed analysis.

Moraski, K. J. and D. C. Munson (1991) “Fast tomographic reconstruction using chirp-z interpolation,” 1991 Conference Record of the Twenty-Fifth Asilomar Conference on Signals, Systems and Computers, Volume 2, Pages 1052–1056.