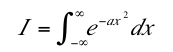

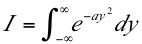

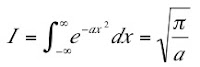

Russ and I write the logistic equation as (Eq. 10.36 in our book)

$$x_{j+1} = a\,x_j\,(1 - x_j) \qquad (10.36)$$

where $x_j$ is the population in the $j$th generation. Our first task is to determine the equilibrium value for $x_j$.

The equilibrium value $x^*$ can be obtained by solving Eq. 10.36 with $x_{j+1} = x_j = x^*$, so that $x^* = a\,x^*(1 - x^*)$. Setting aside the trivial solution $x^* = 0$ and dividing both sides by $x^*$ gives $1 = a(1 - x^*)$, or

$$x^* = 1 - \frac{1}{a}. \qquad (10.37)$$

Point $x^*$ can be interpreted graphically as the intersection of the curve given by Eq. 10.36 with the line $x_{j+1} = x_j$, as shown in Fig. 10.22. You can see from either the graph or Eq. 10.37 that there is no solution for positive $x$ if $a$ is less than 1. For $a = 1$ the solution occurs at $x^* = 0$. For $a = 3$ the equilibrium solution is $x^* = 2/3$. Figure 10.23 shows how, for $a = 2.9$ and an initial value $x_0 = 0.2$, the values of $x_j$ approach the equilibrium value $x^* = 0.655$. This equilibrium point is called an attractor.

Figure 10.23 also shows the remarkable behavior that results when $a$ is increased to 3.1. The values of $x_j$ do not come to equilibrium. Rather, they oscillate about the former equilibrium value, taking on first a larger value and then a smaller value. This is called a period-2 cycle: the behavior of the map has undergone period doubling. What is different about this value of $a$? Nothing looks strange about Fig. 10.22. But it turns out that if we consider the slope of the graph of $x_{j+1}$ vs. $x_j$ at $x^*$, we find that for $a$ greater than 3 the slope of the curve at the intersection has a magnitude greater than 1.

Usually, when Russ and I say something like “it turns out,” we include a homework problem to verify the result. Homework 34 in Chapter 10 does just this; the reader must prove that the magnitude of the slope is greater than 1 for $a$ greater than 3.
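As a quick numerical illustration of Figs. 10.22 and 10.23, here is a minimal Python sketch that iterates Eq. 10.36 from $x_0 = 0.2$ for $a = 2.9$ and $a = 3.1$, evaluates Eq. 10.37, and estimates the slope of the $x_{j+1}$ versus $x_j$ curve at $x^*$ with a central difference. The function names are hypothetical helpers, not anything from the book, and the slope estimate is only a numerical check, not the proof that Homework 34 asks for.

```python
# Minimal sketch: iterate the logistic map (Eq. 10.36), evaluate the
# equilibrium (Eq. 10.37), and estimate the slope of the map at x*.
# Helper names are hypothetical, chosen for this illustration only.

def logistic(x, a):
    """One step of the logistic map, x_{j+1} = a x_j (1 - x_j)."""
    return a * x * (1.0 - x)


def equilibrium(a):
    """Nonzero equilibrium x* = 1 - 1/a (Eq. 10.37); positive only for a > 1."""
    return 1.0 - 1.0 / a


def iterate(a, x0, n):
    """Return [x_0, x_1, ..., x_n] generated by the logistic map."""
    xs = [x0]
    for _ in range(n):
        xs.append(logistic(xs[-1], a))
    return xs


def slope_at_equilibrium(a, h=1e-6):
    """Central-difference estimate of d x_{j+1} / d x_j at x*.

    A numerical check only; it does not replace the proof in Homework 34.
    """
    x_star = equilibrium(a)
    return (logistic(x_star + h, a) - logistic(x_star - h, a)) / (2.0 * h)


for a in (2.9, 3.1):
    xs = iterate(a, 0.2, 1000)  # 1000 steps: a generous allowance for transients
    print(f"a = {a}: x* = {equilibrium(a):.4f}, "
          f"slope at x* = {slope_at_equilibrium(a):+.4f}, "
          f"last two values = {xs[-2]:.4f}, {xs[-1]:.4f}")
```

For $a = 2.9$ the last two iterates should both sit near 0.655 and the slope's magnitude should be below 1. For $a = 3.1$ the slope's magnitude exceeds 1, and the last two iterates should differ, alternating between the two values of the period-2 cycle.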

One theme of Intermediate Physics for Medicine and Biology is the use of simple, elementary examples to illustrate fundamental ideas. I like to search for such examples to use in homework problems. One example with great biological and medical relevance is discussed in Problems 37 and 38 (a model for cardiac electrical dynamics based on the idea of action potential restitution). But when reading May’s review in Nature, I found another example that, while it doesn’t have much direct biological relevance, is as simple as, or even simpler than, the logistic map. Below is a homework problem based on May’s example.

Section 10.8

Problem 33½. Consider the difference equation

(a) Plot $x_{n+1}$ versus $x_n$ for the case of $a = 3/2$, producing a figure analogous to Fig. 10.22.

(b) Find the range of values of $a$ for which the solution for large $n$ neither diverges to infinity nor decays to 0. You can do this using either arguments based on plots like the one in part (a) or numerical examples.

(c) Find the equilibrium value $x^*$ as a function of $a$, using a method similar to the one that led to Eq. 10.37.

(d) Determine whether this equilibrium value is stable or unstable, based on the magnitude of the slope of the $x_{n+1}$ versus $x_n$ curve at $x^*$.

(e) For $a = 3/2$, calculate the first 20 values of $x_n$ using 0.250 and 0.251 as initial conditions. Be sure to carry your calculations out to at least five significant figures. Do the results appear to be chaotic? Are the results sensitive to the initial conditions?

(f) For one of the data sets generated in part (e), plot $x_{n+1}$ versus $x_n$ for 25 values of $n$, creating a plot analogous to Fig. 10.27. Explain how you could use this plot to distinguish chaotic data from a random list of numbers between zero and one.
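For parts (e) and (f), a few lines of Python can replace a lot of hand calculation. This sketch comes with one important caveat: the difference equation for Problem 33½ is not reproduced in this post, so `stand_in_step` is a hypothetical placeholder (the logistic map of Eq. 10.36 with an arbitrarily chosen $a = 3.9$) that should be replaced by the problem's map with $a = 3/2$. The helpers `first_values` and `return_map_pairs` are likewise illustrative names, not part of any library.

```python
# Hypothetical helpers for parts (e) and (f) of Problem 33 1/2.
# ASSUMPTION: the problem's difference equation is not reproduced here, so
# `stand_in_step` (logistic map, a = 3.9) is only a placeholder; swap in the
# map from the problem, using a = 3/2.

def stand_in_step(x, a=3.9):
    """Placeholder map: the logistic map x_{n+1} = a x_n (1 - x_n)."""
    return a * x * (1.0 - x)


def first_values(step, x0, count):
    """Return the first `count` values [x_0, x_1, ...] generated by `step`."""
    xs = [x0]
    while len(xs) < count:
        xs.append(step(xs[-1]))
    return xs


def return_map_pairs(xs):
    """Pairs (x_n, x_{n+1}) for a return-map plot like Fig. 10.27."""
    return list(zip(xs[:-1], xs[1:]))


run_a = first_values(stand_in_step, 0.250, 20)
run_b = first_values(stand_in_step, 0.251, 20)

# Show both runs to six decimal places, plus their difference at each step.
for n, (xa, xb) in enumerate(zip(run_a, run_b)):
    print(f"n = {n:2d}   x_n = {xa:.6f}   x_n' = {xb:.6f}   diff = {xb - xa:+.6f}")

pairs = return_map_pairs(run_a)  # (x_n, x_{n+1}) points for the part (f) plot
```

Python floats carry roughly 15 to 17 significant digits, comfortably more than the five the problem asks for. With the problem's own map substituted, the `diff` column shows directly whether the two runs stay together or drift apart, and `pairs` can be handed to any scatter-plot tool.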