Can magnetic resonance imaging detect electrical activity in your brain? If so, it would be a breakthrough in neural recording, providing better spatial resolution than electroencephalography or magnetoencephalography.

Functional magnetic resonance imaging (fMRI) is already used to detect brain activity, but it records changes in blood flow and oxygenation (BOLD, or blood-oxygen-level-dependent, imaging), which are an indirect measure of electrical signaling. MRI ought to be able to detect brain function directly: bioelectric currents produce their own biomagnetic fields, which should affect a magnetic resonance image.

Russ Hobbie and I discuss this possibility in Section 18.12 of Intermediate Physics for Medicine and Biology.

The magnetic field produced in the brain is tiny: a nanotesla or less. In an article I wrote with my friend Ranjith Wijesinghe of Ball State University and his students (Medical and Biological Engineering and Computing, Volume 50, Pages 651‐657, 2012), we concluded:

MRI measurements of neural currents in dendrites [of neurons] may be barely detectable using current technology in extreme cases such as seizures, but the chance of detecting normal brain function is very small. Nevertheless, MRI researchers continue to develop clever new imaging methods, using either sophisticated pulse sequences or data processing. Hopefully, this paper will outline the challenges that must be overcome in order to image dendritic activity using MRI.

Truong et al. (2019) “Toward Direct MRI of Neuro-Electro-Magnetic Oscillations in the Human Brain,” Magn. Reson. Med. 81:3462-3475.

Since we published those words seven years ago, has anyone developed a clever pulse sequence or a fancy data processing method that allows imaging of biomagnetic fields in the brain? Yes! Or, at least, maybe. Researchers in Allen Song’s laboratory published a paper titled “Toward Direct MRI of Neuro-Electro-Magnetic Oscillations in the Human Brain” in the June 2019 issue of Magnetic Resonance in Medicine. I reproduce the abstract below.

Purpose: Neuroimaging techniques are widely used to investigate the function of the human brain, but none are currently able to accurately localize neuronal activity with both high spatial and temporal specificity. Here, a new in vivo MRI acquisition and analysis technique based on the spin-lock mechanism is developed to noninvasively image local magnetic field oscillations resulting from neuroelectric activity in specifiable frequency bands.

Methods: Simulations, phantom experiments, and in vivo experiments using an eyes-open/eyes-closed task in 8 healthy volunteers were performed to demonstrate its sensitivity and specificity for detecting oscillatory neuroelectric activity in the alpha‐band (8‐12 Hz). A comprehensive postprocessing procedure was designed to enhance the neuroelectric signal, while minimizing any residual hemodynamic and physiological confounds.

Results: The phantom results show that this technique can detect 0.06-nT magnetic field oscillations, while the in vivo results demonstrate that it can image task-based modulations of neuroelectric oscillatory activity in the alpha-band. Multiple control experiments and a comparison with conventional BOLD functional MRI suggest that the activation was likely not due to any residual hemodynamic or physiological confounds.

Conclusion: These initial results provide evidence suggesting that this new technique has the potential to noninvasively and directly image neuroelectric activity in the human brain in vivo. With further development, this approach offers the promise of being able to do so with a combination of spatial and temporal specificity that is beyond what can be achieved with existing neuroimaging methods, which can advance our ability to study the functions and dysfunctions of the human brain.

I’ve been skeptical of work by Song and his team in the past; see, for instance, my article with Peter Basser (Magn. Reson. Med. 61:59‐64, 2009) critiquing their “Lorentz Effect Imaging” idea. However, I’m optimistic about this recent work. I’m not expert enough in MRI to judge all the technical details (and there are lots of technical details), but the work appears sound.

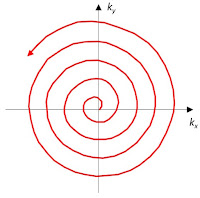

The key to their method is “spin-lock,” which I discussed before in this blog. To understand spin-lock, let’s compare it with a typical MRI π/2 pulse (see Section 18.5 in IPMB). Initially, the spins are in equilibrium along a static magnetic field Bz (blue in the left panel of the figure below). To be useful for imaging, the spins must be rotated into the x-y plane so that they precess about the z axis at the Larmor frequency (typically a radio frequency of many megahertz, with the exact frequency proportional to Bz). If you apply an oscillating magnetic field Bx (red) perpendicular to Bz, with a frequency equal to the Larmor frequency and for just the right duration, you will rotate all the spins into the x-y plane (green). The behavior is simpler if, instead of viewing it from the static laboratory frame of reference (x, y, z), we view it from a frame of reference rotating at the Larmor frequency (x', y', z'). At the end of the π/2 pulse the spins point in the y' direction and appear static (they will eventually relax back to equilibrium, but we ignore that slow process in this discussion). If you’re having trouble visualizing the rotating frame, see Fig. 18.7 in IPMB; the z and z' axes are the same, and it’s the x-y plane that’s rotating.
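To put numbers on the Larmor frequency and on the “just the right duration” of the π/2 pulse, here is a minimal Python sketch. It assumes protons (γ/2π ≈ 42.577 MHz/T), example field strengths of 1.5 T and 3 T, and a hypothetical RF amplitude of 10 μT for the component that rotates with the spins (and so looks static along x' in the rotating frame). The function names are illustrative, not from IPMB.

```python
import math

GAMMA_BAR = 42.577e6               # proton gyromagnetic ratio / 2*pi (Hz/T)
GAMMA = 2 * math.pi * GAMMA_BAR    # proton gyromagnetic ratio (rad/s/T)

def larmor_frequency(b0):
    """Larmor frequency (Hz) of protons in a static field b0 (T)."""
    return GAMMA_BAR * b0

def pulse_duration(flip_angle, b1):
    """Time (s) a resonant RF field must be on to tip spins through flip_angle
    (rad); b1 (T) is the amplitude of the component rotating with the spins,
    which appears as a static field along x' in the rotating frame."""
    return flip_angle / (GAMMA * b1)

for b0 in (1.5, 3.0):   # example scanner field strengths (T)
    print(f"Bz = {b0} T: Larmor frequency = {larmor_frequency(b0) / 1e6:.1f} MHz")

# Hypothetical RF amplitude of 10 microtesla
print(f"pi/2 pulse: {pulse_duration(math.pi / 2, 10e-6) * 1e3:.3f} ms")
```

This gives 63.9 MHz at 1.5 T, 127.7 MHz at 3 T, and a π/2 pulse of about 0.587 ms. Because the flip angle is γB1 times the pulse duration, doubling the RF amplitude halves the time needed.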

After the π/2 pulse, you would normally continue your pulse sequence by measuring the free induction decay or creating an echo. In a spin-lock experiment, however, after the π/2 pulse ends you apply a circularly polarized magnetic field By' (blue in the right panel) at the Larmor frequency. In the rotating frame, By' appears static along the y' direction. Now you have a situation in the rotating frame that’s similar to the situation you had originally in the laboratory frame: a static magnetic field and spins aligned with it, seemingly in equilibrium. What magnetic field during the spin-lock plays the role of the radiofrequency field during the π/2 pulse? You need a magnetic field in the z' direction that oscillates at the spin-lock frequency: the frequency at which spins precess about By'. An oscillating neural magnetic field Bneural (red) would do the job. It must oscillate at the spin-lock frequency, which is proportional to the strength of the circularly polarized magnetic field By'.
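In the rotating frame, the spin-lock frequency obeys the same relation as the Larmor frequency, f = γB/2π, but with the much weaker field By' in place of Bz. The Python sketch below, assuming protons (γ/2π ≈ 42.577 MHz/T) and using hypothetical function names, estimates how large By' must be for the spins to precess about it at alpha-band frequencies.

```python
import math

GAMMA = 2 * math.pi * 42.577e6   # proton gyromagnetic ratio (rad/s/T)

def spin_lock_amplitude(f_lock):
    """Spin-lock field amplitude (T) that makes spins precess about y' at
    f_lock (Hz) in the rotating frame, from f_lock = GAMMA * B / (2*pi)."""
    return 2 * math.pi * f_lock / GAMMA

def spin_lock_frequency(b_lock):
    """Inverse relation: precession frequency (Hz) about a spin-lock field b_lock (T)."""
    return GAMMA * b_lock / (2 * math.pi)

for f in (8.0, 10.0, 12.0):   # edges and middle of the alpha band (Hz)
    print(f"{f:4.1f} Hz -> By' = {spin_lock_amplitude(f) * 1e9:.0f} nT")
```

The result is a few hundred nanotesla (about 235 nT for 10 Hz), more than ten million times weaker than a 3 T static field. Because the spin-lock frequency is proportional to By', a 1% error in By' moves the resonance by 0.1 Hz at 10 Hz.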

Song and his team adjusted the magnitude of By' so that the spin-lock frequency matched the frequency of alpha waves in the brain (about 10 Hz). The neural magnetic field then rotates the spins from y' to z' (green). Once you accumulate spins in the z' direction, turn off the spin-lock and you are back to where you started (spins in the z direction in a static field Bz), except that the number of these spins depends on the strength of the neural magnetic field and the duration of the spin-lock. A neural magnetic field not at resonance (that is, at any frequency other than the spin-lock frequency) will not rotate spins to the z' axis. You now have an exquisitely sensitive method of detecting an oscillating biomagnetic field, analogous to using a lock-in amplifier to isolate a particular frequency in a signal.
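The resonance condition can be checked numerically. The Python sketch below is my own illustration of the physics, not Song’s analysis: it integrates the Bloch equation dM/dt = γ M × B in the rotating frame with B = (0, By', Bneural cos 2πft), ignoring relaxation and assuming the spin-lock field is exactly on resonance. The neural amplitude is a hypothetical, exaggerated value of about 12 nT (roughly 200 times the 0.06 nT detected in the phantom), chosen so the rotation is visible after a hypothetical 1 s spin-lock. It reports the magnetization still locked along y'; whatever has rotated away from y' is what the spin-lock detects.

```python
import math

GAMMA = 2 * math.pi * 42.577e6  # proton gyromagnetic ratio (rad/s/T)

def rotate(v, axis, angle):
    """Rotate vector v by angle (rad) about unit vector axis (Rodrigues' formula)."""
    kx, ky, kz = axis
    vx, vy, vz = v
    c, s = math.cos(angle), math.sin(angle)
    dot = kx * vx + ky * vy + kz * vz
    cross = (ky * vz - kz * vy, kz * vx - kx * vz, kx * vy - ky * vx)  # axis x v
    return tuple(vi * c + ci * s + ki * dot * (1 - c)
                 for vi, ci, ki in zip(v, cross, axis))

def spin_lock(b_lock, b_neural, f_neural, duration, dt=1e-4):
    """Integrate dM/dt = GAMMA * M x B in the rotating frame (no relaxation) with
    B = (0, b_lock, b_neural * cos(2*pi*f_neural*t)), starting from M along y'.
    Returns the final magnetization (Mx', My', Mz')."""
    m = (0.0, 1.0, 0.0)
    w = 2 * math.pi * f_neural
    for i in range(round(duration / dt)):
        bz = b_neural * math.cos(w * (i + 0.5) * dt)  # neural field at mid-step
        mag = math.hypot(b_lock, bz)
        # During one short step, M precesses about B by the angle -GAMMA*|B|*dt
        m = rotate(m, (0.0, b_lock / mag, bz / mag), -GAMMA * mag * dt)
    return m

f_lock = 10.0                              # spin-lock frequency tuned to alpha (Hz)
b_lock = 2 * math.pi * f_lock / GAMMA      # spin-lock field By' (T)
duration = 1.0                             # hypothetical spin-lock duration (s)
b_neural = math.pi / (GAMMA * duration)    # hypothetical, exaggerated (~12 nT)

for f in (10.0, 13.0, 20.0):
    m = spin_lock(b_lock, b_neural, f, duration)
    print(f"neural field at {f:4.1f} Hz: M_y' = {m[1]:+.3f}")

# Rotating-wave estimate of the tip angle, theta = GAMMA * B_neural * T / 2
# (only half of a linearly polarized field co-rotates with the spins),
# for the 0.06 nT phantom field and a hypothetical 100 ms spin-lock
theta = GAMMA * 0.06e-9 * 0.1 / 2
print(f"tip angle for 0.06 nT: {math.degrees(theta):.3f} degrees")
```

On resonance (10 Hz) the locked magnetization falls to nearly zero, while at 13 Hz or 20 Hz it stays close to 1, which is the frequency selectivity described above. The last line shows why the real measurement is hard: under these assumptions a 0.06 nT field tips the spins by less than a tenth of a degree.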

Comparison of a π/2 pulse and spin-lock.

There’s a lot more to Song’s method than I’ve described, including complicated techniques to eliminate any contaminating BOLD signal. But it seems to work.

Will this method revolutionize neural imaging? Time will tell. I worry about how well it can detect neural fields that do not oscillate at a single frequency. Nevertheless, Song’s experiment, together with the work from Okada’s lab that I discussed three years ago, may mean we’re on the verge of something big: using MRI to directly measure neural magnetic fields.
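The lock-in analogy makes this worry concrete. A lock-in amplifier multiplies a signal by a reference at the frequency of interest and averages the product, so only the component at that frequency survives, and a brief oscillation is diluted by the averaging. The Python sketch below is a generic software lock-in, not part of Song’s method; the signals are hypothetical, and the spin-lock is only loosely analogous, since it responds through the Bloch equation rather than by literally multiplying and averaging.

```python
import math

def lock_in_amplitude(signal, fs, f_ref):
    """Software lock-in: multiply the samples by cosine and sine references at
    f_ref (Hz) and average over the whole record, which acts as a crude low-pass
    filter. Returns the amplitude of the component at f_ref."""
    n = len(signal)
    in_phase = sum(s * math.cos(2 * math.pi * f_ref * k / fs) for k, s in enumerate(signal))
    quadrature = sum(s * math.sin(2 * math.pi * f_ref * k / fs) for k, s in enumerate(signal))
    return 2 * math.hypot(in_phase, quadrature) / n

fs = 1000.0   # sampling rate (Hz)
n = 2000      # 2 s record
# Hypothetical test signals: a steady 10 Hz oscillation beneath a larger 13 Hz
# oscillation plus an offset, and a brief 10 Hz burst lasting only 0.2 s
steady = [math.sin(2 * math.pi * 10 * k / fs + 0.3)
          + 3 * math.sin(2 * math.pi * 13 * k / fs) + 2.0 for k in range(n)]
burst = [math.sin(2 * math.pi * 10 * k / fs) if k < 200 else 0.0 for k in range(n)]

print(f"steady signal, 10 Hz reference: {lock_in_amplitude(steady, fs, 10.0):.3f}")
print(f"steady signal, 13 Hz reference: {lock_in_amplitude(steady, fs, 13.0):.3f}")
print(f"0.2 s burst,   10 Hz reference: {lock_in_amplitude(burst, fs, 10.0):.3f}")
```

The steady 10 Hz component is recovered at its full amplitude of 1 even beneath a larger 13 Hz oscillation, but a 10 Hz burst occupying only 0.2 s of the 2 s record reads as one tenth of its true amplitude.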

In the conclusion of their article, Song and his coworkers strike an appropriate balance between acknowledging the limitations of their method and speculating about its potential. Let’s hope their final sentence comes true.

Our initial results provide evidence suggesting that MRI can be used to noninvasively and directly image neuroelectric oscillations in the human brain in vivo. This new technique should not be viewed as being aimed at replacing existing neuroimaging techniques, which can address a wide range of questions, but it is expected to be able to image functionally important neural activity in ways that no other technique can currently achieve. Specifically, it is designed to directly image neuroelectric activity, and in particular oscillatory neuroelectric activity, which BOLD fMRI cannot directly sample, because it is intrinsically limited by the temporal smear and temporal delay of the hemodynamic response. Furthermore, it has the potential to do so with a high and unambiguous spatial specificity, which EEG/MEG cannot achieve, because of the limitations of the inverse problem. We expect that our technique can be extended and optimized to directly image a broad range of intrinsic and driven neuronal oscillations, thereby advancing our ability to study neuronal processes, both functional and dysfunctional, in the human brain.